Tech News

Intel Releases Ultra-Fast X25-M SSD

Unless there is some sort of revelation with regard to the traditional hard drive soon, SSD is likely going to become the future of storage. If you ask a hard drive vendor, they’ll tell you that SSD might be the future, but the traditional hard drive will still be the ultimate choice. It’s hard to disagree, since right now, you can score a 1TB drive for under $200, whereas a 64GB SSD costs 50% more than that.

What’s helping the progression is fierce development though, especially from Intel. They offered insight into their SSDs a few weeks ago at IDF that laid out a great outlook, and today, we see the launch of one hardcore-fast product, the X25-M 80GB SSD.

Our friends at the Tech Report have taken the new drive for a spin, and overall their opinions are good. The drive has top-of-the-line read speeds, but lacks quite a bit where write speeds are concerned, as is common with MLC-based drives. The end result was around 70MB/s write, which pales in comparison to some hard drives out there. Hopefully we’ll see this issue tackled over the course of the next year, because it’s an important one on a product that costs well over 10x more per GB.

Price is another problem for solid-state drives, and with the 80GB X25-M slated to sell for just under $600 in 1,000-unit quantities, Intel’s first entry in the market won’t be cheap. At that price, the X25-M sits between budget MLC-based models and their more expensive SLC-based cousins, which seems about right to me. After all, the X25-M was often faster than Samsung’s SLC-based FlashSSD, which costs nearly $800 for only 64GB.
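To put those prices in perspective, here is a quick back-of-the-envelope sketch in Python. It uses only the rounded figures quoted in this post ("under $200" for a 1TB hard drive, "just under $600" for the X25-M, "nearly $800" for the Samsung), treats 1TB as 1,000GB, and should be read as a rough comparison rather than exact street pricing.

```python
# Rough cost-per-gigabyte comparison using the rounded prices quoted above.
# The prices are approximations ("under", "just under", "nearly"), and
# 1TB is assumed to be 1,000GB for simplicity.
drives = {
    "1TB hard drive": (200.0, 1000),
    "Intel X25-M 80GB (MLC)": (600.0, 80),
    "Samsung FlashSSD 64GB (SLC)": (800.0, 64),
}


def cost_per_gb(price_usd, capacity_gb):
    """Return the price in US dollars for one gigabyte of capacity."""
    return price_usd / capacity_gb


for name, (price, capacity) in drives.items():
    print(f"{name}: ${cost_per_gb(price, capacity):.2f}/GB")
```

With these figures the hard drive lands at roughly $0.20 per GB, the X25-M at about $7.50 and the Samsung at about $12.50, which is why price remains the sticking point.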

Is S3’s Chrome 440 GTX Worth a Look?

ATI and NVIDIA… that’s who usually comes to mind when we think of graphics processors, and it’s obvious why. Both companies offer sensational products when compared to the competition, and unless Intel’s Larrabee really does kick the serious ass that they are claiming it will, then the GPU reins will remain with both ATI and NVIDIA.

But what about S3? They’ve been unable to take over either the green or red side, but that’s hard to do when all of their products are value-oriented. techPowerUp takes a look at their new 440 GTX, but don’t let the name fool you. GTX might usually represent a standout card, but the 440 GTX falls well below even the Radeon HD 3850 in terms of overall specs, and comes in at $69.

So, how did it perform? Well, not so bad actually, but it’s still going to be a hard decision to choose it over the competition. For $70, you can get a comparable ATI or NVIDIA card with better driver support, but if you simply must own something other than ATI or NVIDIA… your choices are already a bit limited, right?

The card has one analog VGA, one DVI and one HDMI port. This configuration is certainly the optimum for a card in this segment. VGA to allow CRT users to use this card without going through the hassle of an adapter. Dual-link DVI for the LCD users and HDMI for all media PC scenarios. Just like ATI, S3 has integrated an audio device on their card, so your big screen will receive an audio stream from the graphics card without the needs of an external audio in cable.

Intel Roadmaps Show Lack of CPU Launches in 1H 2009

If you enjoy looking at huge diagrams inside of PDF files, then you are in luck. Japanese site PC Watch somehow got a hold of a large collection of PDFs that outline Intel’s future plans for a variety of product lines, from processors to chipsets and beyond.

After a quick look through a few of the different documents, I didn’t notice anything extremely out of the ordinary, but the diagrams definitely verify a lot of what we were thinking. The first Nehalem launch parts are still clocked at 3.2GHz, 2.93GHz and 2.66GHz, with follow-up parts in… Q3 2009? Yes, you read that right.

In Q3 of next year, Intel will launch a handful of new processors, including mainstream Quad-Cores and Dual-Cores, although none of the roadmaps include exact clock speeds yet, understandably so. Nehalem’s 32nm successor, Westmere, is still slated for 2010, which is when we’ll begin to see desktop six-core processors. It’s clear to me that Intel is no longer toying with the idea of an Octal-Core, at least right now, which I’m sure most people will be fine with.

For a real time-killer, definitely head over to that link and download all of the documents. It is sure to keep you busy for quite a while.

As for pricing, the first quad-core “Bloomfield” to be introduced is the top-brand Extreme SKU (Stock Keeping Unit) at 3.2GHz (8MB/QPI 6.4GT/s/DDR3-1066), at the traditional top-level price of $999. That is the same level as the Core 2 Extreme QX9650 (3GHz/12MB/FSB1333), and cheap next to the specially high-priced QX9770/9775 (3.2GHz/12MB/FSB1600) at $1,399/$1,499.

Concept Art for Larrabee’s Launch Title Released

Intel’s upcoming Larrabee architecture (which we’ve looked at in-depth here) is still a long way off, but that isn’t stopping the company from teasing us with concept art from Project Offset, whose developer Intel acquired earlier this year. You can see one of the artsy shots below.

The multi-core ‘GPU’ is still a mystery for the most part, because while we understand the basic architectural benefits, we can also see potential downsides. The concept art in no way gives us insight into what to expect from the GPU or the game, but it’s certainly looking good so far. Older teaser videos of Project Offset were amazing, and if Larrabee can power something like that, then Intel might not have such a hard time selling their product after all.

To coincide with these images, the game’s developers will be relaunching their community forums, both for comment on these new pieces of concept art and also to discuss the direction of the game. Why they were closed in the first place is anyone’s guess, but at least now we’ll be able to keep better track of the game, and hopefully begin to understand exactly what Larrabee is capable of.

The other piece of news Offset and Intel will announce on Monday is the relaunch of Offset’s community forum at 10 AM PST. We hear the Offset team is “excited” to be back online and touch base with users again. That is, of course, because Offset hopes to get user feedback on the game title it is developing.

Do Monster Cables Actually Serve a Purpose?

Whenever I hear the words “Monster Cable”, I clench my fists, because I know what kind of rip-offs their products are. It can be debated, but it’s been proven over and over that a standard cable is almost always just as effective, as long as you are dealing with cable in feet and not meters (most people don’t need meters).

Well, could I be wrong? CrunchGear took a jaunt to CEDIA over the weekend and noticed Monster was walking around with a mobile station, showing people how much better their cables are over the competition. They compared all the cables with one heck of a video noise analyzer, and lo and behold, they did prove that their cables are better.

But while their cables did indeed prove their worth in being able to transfer much more data than the competition, the fact remains that this kind of bandwidth is generally just not needed by the vast majority of people. There is a cutoff, but most people aren’t going to see video noise. However, I will admit it’s nice to see that Monster does indeed have a product designed for enthusiasts, but it still seems to only matter with long cables (longer than 10 feet).

The way the engineer explained it to me is that viewers will not notice the difference in short runs because the loss can be compensated for by the HDTV’s processors. Apparently, with longer cables, the extra noise is more noticeable and therefore the need for higher-quality long digital cables arises.

AMD to Launch 45nm Quad-Cores in January

As I mentioned in today’s pricing guide, the choice between current AMD processors is a simple one, simply because their entire collection of models is priced so close to each other, there are really only two choices. Well, we’ve all been waiting for AMD to pull something out of their sleeve, but it sure can’t come soon enough. What’s next is their 45nm revisions, and according to certain leaks, that might happen in January.

It’s just too bad that January is four months away, and it’s made even worse because we were supposed to see these CPUs already. AMD was originally quoted as being able to deliver their die shrinks in the middle of this year, which obviously didn’t happen. So, it’s still going to be a little bit of a wait, and it’s too bad, because there’s little hope that a simple die shrink is going to help AMD gain the traction they need. It’s not good when their most expensive processor is $180.

With Intel’s Nehalem right around the corner (~two months), AMD is really going to have to innovate to push out a product in the near future that’s going to make Intel even bat an eye. It’s far too early to write 45nm AMD off, though; as long as the chips are priced competitively, they may very well compete nicely with Intel’s current offerings. One of the biggest caveats with AMD processors right now is power efficiency, and with the die shrink, that should improve. January is going to be an interesting month.

Getting back to next-gen Phenoms, the roadmap lists two chips due for January: one clocked at 2.8GHz and another pegged at 3GHz, both with 125W thermal envelopes just like the existing Phenom X4 9850. Unless Intel’s plans fall through, the new Phenoms may face similarly clocked Core i7 processors with more threads per core. AMD may well aim for lower prices, though.

Ubuntu Usage Reaches 8 Million

It’s official, Linux is growing fast, and it has numerous sources to thank. Aside from the desktop, millions of mobile devices are running some form of Linux, like the Eee PC, so that in itself is helping to improve the scene. On the desktop side though, Ubuntu’s commitment to the community has really helped skyrocket usage in the home, and according to one stalker of Canonical’s marketing materials, the distro now has around eight million total users.

How this number was produced is anyone’s guess, but users are speculating it’s done by counting the number of people who use the apt-get command to connect to Ubuntu’s own repositories. Either way, eight million is an impressive number, especially for an operating system that’s completely free and is so different from Windows. Linux still has a long way to go compared to Windows and OS X, but things are at least readily improving.

Alas, measuring Ubuntu’s active installed base can be tricky since a single copy of the operating system can be freely installed over and over again on multiple systems. And in mid-2007 during the Ubuntu Live conference, Ubuntu/Canonical founder Mark Shuttleworth estimated the operating system’s installed base at 6 million to 12 million users.
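For the curious, the kind of counting users are speculating about could look something like the Python sketch below. Everything in it is hypothetical: the records, the addresses (taken from documentation-only ranges) and the `estimate_users` helper are made up for illustration, and nothing here reflects how Canonical actually arrived at its figure.

```python
# Hypothetical request records: (client_address, requested_path).
# The data is invented for illustration only.
requests = [
    ("203.0.113.5", "/ubuntu/dists/hardy/Release"),
    ("203.0.113.5", "/ubuntu/dists/hardy/Release"),
    ("198.51.100.7", "/ubuntu/dists/hardy/Release"),
    ("192.0.2.44", "/ubuntu/dists/gutsy/Release"),
]


def estimate_users(records):
    """Count distinct client addresses seen in the request records."""
    return len({address for address, _path in records})


print(estimate_users(requests))
```

Even as a sketch, this shows why the number is slippery: several machines behind one shared address would count once, while one machine whose address changes would count several times.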

Finding the Right CPU For You

Looking to build a new machine, or just upgrade the processor in the one you already have? Be sure not to miss our latest article, whose goal is to help you in your search. Depending on whether you want an AMD or Intel processor, the choices might be complicated. Intel offers so many different models that it’s hard to decide on one, and easy to be suckered into buying one that doesn’t live up to the one you should have bought.

Thanks to recent AMD price drops, there are really only two choices on that side, so your decision is made extremely easy there. Their lowest-end triple-core can be had for a measly $102, while their highest-end offering is being offered for around $180. It’s really hard to go wrong with either, depending on what you are looking for.

The other sticky part of the equation is knowing that you can sometimes get a better processor for almost the same price. Take the Q9450 and Q9550, for example. $5 apart, but one is a nice upgrade over the other. We take all this into consideration in our article though, so if you need a new CPU, don’t miss it.

Our choices for recommended CPUs are based on our expertise. We stalk new CPU releases, benchmark the life out of them in our labs and also keep a close eye on pricing, as is evident by our news section. Because of the sheer number of processors available on the market, we don’t include all of them here, but rather pick different models at varying price ranges that seem to make the most sense.

Source: Fall Processor Pricing – Finding the Best Bang for the Buck

OCZ’s 60GB Core V2 SSD – $239 after MIR

It’s become obvious to me over the past year that our standard hard drives are one of the reasons our computers remain slow during some heavy-duty work. On my own PC, I have a fast HDD, but even copying a 10GB folder will render the PC slow for the entire duration. And that’s on a nice Quad-Core machine with 4GB of RAM! That’s why SSDs are likely to be the future, and why interest is high.

Though I haven’t played around with SSDs myself, I’ve seen demos at conferences that have been extremely impressive, especially if you pair a few together in RAID. Copying a file at an actual 1GB/s? Yes, please! The problem a year ago though, was that any SSD was far, far too expensive. Luckily, prices are dropping at a record pace, and some of them are actually becoming somewhat affordable, like OCZ’s Core Series V2.

Our friends at DailyTech happened to notice that NewEgg is now stocking these drives, and the 60GB model is currently selling for $239 after MIR… much easier to stomach than what it would have been just a few months ago. The V2 boasts read speeds of 170 MB/s with write speeds of 98 MB/s… very impressive. I’ll still sit back and wait for prices to drop even further, but it’s going to be a great day when we can all fill up our own desktops with such drives. It’s only a matter of time.

For comparison, the original 64GB Core Series SSD (OCZSSD2-1C64G) is priced at $264 on Newegg. Like its newer brother, the older model also comes with a $60 manufacturer rebate which drops the price to $204. The older 64GB SSD features slower read and write speeds of 143MB/sec and 93MB/sec respectively. It also lacks the built-in mini-USB port for firmware upgrades.

Mythbusters Were Allowed to Air RFID Segment?

Yesterday, I posted about Adam Savage and his talk given at The Last HOPE conference back in July, where he talked about the Mythbusters show’s inability to broadcast a segment on RFID… and well, it seems there are a few inaccuracies there. All it took was an explosion of this talk all over the web to find out!

According to a new C|Net post, an RFID segment actually did air, although I don’t recall ever seeing it. It seems to have aired only once, but chances are it didn’t, given how often the Discovery Channel replays old episodes. Adam is also now backtracking:

“If I went into the detail of exactly why this story didn’t get filmed, it’s so bizarre and convoluted that no one would believe me, but suffice to say…the decision not to continue on with the RFID story was made by our production company, Beyond Productions, and had nothing to do with Discovery, or their ad sales department.”

While the episode did air, it apparently didn’t talk about any insecurities, so I’m really not sure what ‘myth’ was being covered. Call me a conspiracy theorist, but something still doesn’t seem to add up. If they are indeed able to cover RFID, maybe they should do it again, this time covering the fact that it’s an insecure technology. Who out there thinks that’ll happen?

From his statement, it’s also logical to conclude that when he told the Last HOPE audience that co-host Belleci was on the conference call, he had meant Grant Imahara, another MythBuster co-host. Further, a Discovery Channel representative told me that MythBusters did end up running an episode, last January, on RFID, but that the issue of the technology’s security holes was not addressed.

Pioneer’s Flagship Blu-ray Player Restores Movie’s Original Color

Who said Blu-ray was a dying format? Sure wasn’t me, and according to Pioneer, it must not be. Why? Because somehow they imagine they’ll be able to sell a $2,199 Blu-ray player, that’s why. But let’s not discredit it just yet… it might have a few features that really do make it the ultimate player around.

What makes the Elite BDP-09FD player so special is that it includes a dedicated chip that restores movies to a 16-bit deep color range, which is, in a sense, a recovery method. When movies are left in their raw format (master copies), the colors, tones and hues can differ from post-production movies. This chip is supposed to restore those and deliver a much richer and more accurate image.

Whether it actually works, who knows, but if you happen to have a $2,200 bill lying around and feel ambitious, by all means, go for it. Besides the ‘restore’ feature, Pioneer also promises that this player will be the best DVD upscaler ever. Still think it’s too expensive? Well, you are not their target audience, as a Senior VP at Pioneer notes, “If you really think about price, price, price, you will miss out on the performance enthusiasts, and that’s who we want. They can do a really nice job of pushing the technology.”

The Elite Blu-ray Disc player also features Pioneer’s proprietary Adaptive Bit Length Expansion technology, which allows the purest color representation of a movie’s original studio master. Through a dedicated chip, the player restores Blu-ray Disc movies, produced in 8-bit color back to the vivid 16-bit deep color gamut resulting in an HD picture filled with hues and tones that replicates the cinematic intentions of the films’ creators.
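To give a feel for what moving from 8-bit to 16-bit color means numerically, here is a minimal Python sketch. It is not Pioneer's Adaptive Bit Length Expansion, whose inner workings aren't described here; it is only the most naive possible mapping of an 8-bit channel value onto the 16-bit range, and the `expand_8_to_16` helper is a made-up name for illustration.

```python
# Number of distinct levels per color channel at each bit depth.
LEVELS_8BIT = 2 ** 8     # 256
LEVELS_16BIT = 2 ** 16   # 65536


def expand_8_to_16(value_8bit):
    """Map an 8-bit channel value (0-255) onto the 16-bit range (0-65535)."""
    if not 0 <= value_8bit <= 255:
        raise ValueError("expected an 8-bit value in 0..255")
    # 65535 / 255 == 257 exactly, so 0 maps to 0 and 255 maps to 65535.
    return value_8bit * 257


print(LEVELS_8BIT, LEVELS_16BIT)
print(expand_8_to_16(0), expand_8_to_16(128), expand_8_to_16(255))
```

A straight mapping like this still only ever produces 256 distinct output values, so it adds no new detail on its own. Whatever the dedicated chip does to fill in the in-between tones is the part Pioneer is charging for.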

LetsCall.me – How to Accept Calls Anonymously

Being a big security buff, I love finding out new ways to remain anonymous to a certain degree, but the problem is that nowadays, it’s almost impossible. But, there are always ways to make things better, and if you happen to want to keep your phone number hidden, then there’s a new web service for you.

Called LetsCall.me, the service allows you to create a quick account, with an identifier that you’d like to appear in the URL. From there, you can pass that URL to anyone of your choosing, and they’ll be able to put in their phone number, which you will be able to see. From that point, LetsCall.me will connect to your phone, and if you pick up, their phone will ring. Once they pick up, voila, you’re connected, all while having your phone number hidden.

The cool thing is that this is a free service, and it seems to be a great way to keep your phone number hidden from someone you don’t know that well, or will only deal with once. Unless you are Kevin Mitnick, chances are you’re going to be unable to reveal the recipient’s real number, but hey, anything’s possible, right? It’s services like this that make the Internet so rich. The phone company could only hope to provide a service this cool, and if they did, they’d undoubtedly charge for it.

Benefits of LetsCall.me: Accept calls anonymously without revealing your phone number, No caller id blocking – always know who’s calling, Block unwanted callers, Easier to remember than a phone number, Great for Craigslist and other internet sites, Be safe – don’t give out your phone number, use LetsCall.me

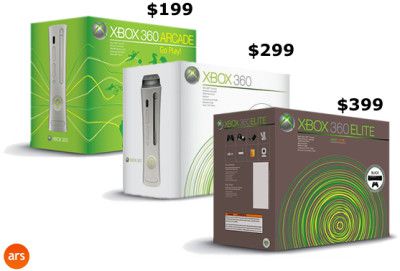

Xbox 360 Price Drops in Effect Tomorrow – $199 for Arcade

“Whoa!” That’s all that can be said about the upcoming price drops on the Xbox 360 from Microsoft, because they’re making the Arcade version of the console so inexpensive, that the Wii ends up costing more! That model of course doesn’t include a hard drive among a few other things, but it’s still a very affordable cost-of-entry.

Starting tomorrow, the price of the Arcade version will hit $199, while the Pro (60GB) will hit $299, which by itself, is still only $50 more than the Wii, but far more powerful. That will likely be the preferred choice for any new buyers, as the included hard drive is almost a requirement in order to enjoy any of today’s games. The HDMI support sure doesn’t hurt either.

The Elite still remains at $399, although I’m unsure exactly why it still costs so much more than the rest. It also includes HDMI, and an upgraded 120GB hard drive and… that seems to be it. It’s painted black though, which might be worth the premium to some (it was to me back in the day, for some reason). Still, the first two models come in at great prices, and I’m still impressed to see that the Wii costs more than the Arcade version. Though I might just be easily impressed, maybe.

“We are thrilled to be the first next-generation console on the market to reach $199, a price that invites everyone to enjoy Xbox 360,” said Microsoft SVP Don Mattrick in a statement. “Xbox 360 delivers amazing performance at an extraordinary value with the leading online service and best lineup of games, downloadable movies and TV shows available from a console.”

Google Chrome – To Love, or Not to Love?

You know that technology is really ingrained into our lives when all the headlines are filled up with Google Chrome-related news. You’d almost swear it was an Apple product! Even my mom, who’s not high on tech, asked me on the day of release if I used it yet. Somehow, the browser was newsworthy enough to appear on TV news, although I’m not sure what channel that was on.

Are people still as excited about Chrome as they were on day one? It’s hard to say, and it seems that even those who are happy with it still have their reservations about using it. For me, it might be the privacy policies, but I’m still waiting until the final launch before those are finalized, in hopes that they change for the better. Another reason might be the fact that it already has a DoS (thanks Don for the link) vulnerability. That sure didn’t take long, but it’s unsurprising. Other browser launches have suffered similar fates.

A few others are still pumped though, and even created some ‘top lists’, like Mashable!, who found seven things to really like about Chrome. My favorite might be Lifehacker’s list of Firefox extensions that enable the best features of Chrome… in Firefox, for which you can see the link and quote below.

By way of fun statistics, yesterday, our traffic saw Chrome usage of 5.44% of all visitors, while today (first ten hours), we saw 3.34%. Small decrease, but it is a beta after all. It’s clear that there are fans though, and people will continue to use it until it drives them nuts with a killer caveat. I’m still waiting for follow-up beta releases though. Google might be doing something great here (minus the privacy policy, of course). If you haven’t already, be sure to goggle over our in-depth first-look.

Apart from a few specific issues (namely process management), many of Chrome’s best features are already available in Firefox 3, proving yet again the power of extensibility. From incognito browsing and the streamlined download manager to URL highlighting and improved search, let’s take a look at how you can bring some of Google Chrome’s best features to Firefox.

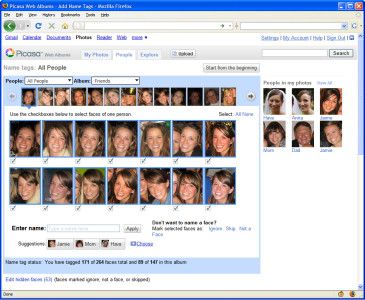

Google Adds Face Recognition to Picasa

It was bound to happen eventually, but who expected it from a completely free application? Google has gone ahead and added face recognition to their Picasa application and co-existing web service, which is sure to make handling your large collections a breeze (or so we can hope).

The web service version works by scanning your photo collection to come up with groups of photos that contain the same person. At that point, you can click all that are matches and affix a name, making them easier to search for in the future. Photos added after-the-fact can be added with the same tag, or rescanned to let Picasa find the similar faces.

To take things even further, you can also add tags for location and activities. The privacy issue really piques my interest here. Google won’t only have information of who someone is and where they are located, but they’ll now be able to see what they look like. In some regards, it’s actually kind of scary, but that’s the power that Google has. Steve Rambam said it best, ‘Privacy is Dead’.

My paranoia aside, this feature is sure to make personal collections a lot easier to manage, and I’m almost tempted to download version 3.0 of the software once it becomes available. There are of course other new features included, so I recommend checking out the blog link below to read about them.

Tagging is a powerful way to sort digital photographs. Photo albums are useful, but with rich tagging, people also can slice and dice their photo collection to show particular people, activities, or locations. Even with face recognition technology or other computer processing, the textual tags in photos are a far more reliable way for computers to understand image content.

Google’s Chrome – Browser Done Right?

On Monday, we linked to news of a Google browser that would possibly be launched sometime in the near future. Well, ‘possibly’ is the wrong word to use, because it was released the very next day. If only all rumors solidified so fast! I admit, even while making that news post, I remained skeptical about how great their browser could actually be. There is so much competition, so how would they have a hope to even compete?

Well, now that the browser is available, I can rightfully say that my opinion has been changed entirely. Google isn’t just releasing a browser in order to throw their badge on something… they’re actually doing things that are unique, innovative, and common sense, in order to help the web evolve. I’m not so sure how their browser will help the web evolve, but I do know they are going in the right direction.

In case you didn’t look at the top of the site today, be sure not to miss our in-depth look at the new browser. Do so even if you downloaded the browser yourself… you never know, we might just help you figure out something you didn’t even know was there! What’s most impressive to me is that despite the first release of Chrome being a beta, it’s well done, and seems entirely stable. I can’t wait to see what the follow-up releases are going to be like.

One example in particular is when I am preparing a post for our news section or our forums, and then all of Firefox goes down in one swoop, without warning and without error. To prevent this, Chrome throws each tab into its own instance within Windows, so if one tab crashes, it won’t affect the other tabs that are still open. When first starting Chrome up, you’ll notice that two instances are active – one for the browser, and another for the ‘speed dial’, I assume. Open up another tab, and you’ll see a third instance, and this increases as you open more tabs.
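The idea of giving each tab its own process can be illustrated with a small Python sketch. This is not how Chrome is implemented; it is a toy in which three hypothetical "tabs" (the names and the little programs in `TABS` are invented) each run in a separate interpreter process, one after another, so that a crash in one cannot take the others down.

```python
import subprocess
import sys

# Hypothetical "tabs": each is a tiny Python program run in its own process.
TABS = {
    "news": "print('news tab rendered')",
    "forums": "raise SystemExit(1)  # simulate a tab that crashes",
    "mail": "print('mail tab rendered')",
}


def run_tabs(tabs):
    """Run every tab in a separate OS process and report which ones survived."""
    results = {}
    for name, source in tabs.items():
        # Each tab gets a fresh interpreter, so a failure stays contained.
        proc = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            text=True,
        )
        results[name] = proc.returncode == 0
    return results


if __name__ == "__main__":
    for name, ok in run_tabs(TABS).items():
        print(f"{name}: {'ok' if ok else 'crashed'}")
```

The "forums" tab exits with an error while "news" and "mail" finish normally, which is the same containment the paragraph above describes, at the cost of one extra process per tab.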

Is Vista Driving People Towards Linux?

Alright, I admit that I’m somewhat of a Linux fan, given it’s my primary OS, but I’m not about to make assumptions that Windows Vista is so bad, that it’s driving people towards it. Could I be wrong? Well, according to an author at IT Wire, it’s a definite possibility. The author explains that he himself had huge beefs with Vista, and from what I assume, he decided to stick with Linux.

For whatever reason, I actually seem to be going in a somewhat opposite direction when it comes to Vista-bashing. When the OS was first released, I had no end of Vista spite, but now that Service Pack 1 has been released, and I realized how much better the 64-bit version of the OS is, I’m starting to hate it a lot less. I’ve been using it as the primary OS on the Skulltrail machine here since earlier this year, and I really haven’t run into any show-stopping issues.

I know I’m alone, though, and not a day goes by when I don’t hear someone talking about how bad Vista is… which actually surprises me, given that the typical user tends to be a little more patient with OS annoyances than the advanced user. So is Vista actually pushing users towards Linux? I’m willing to be debated, but I’m doubtful. As is obvious from all the news posts I make about Linux, I love the OS, but it still has a way to go before people are going to begin flocking to it.

The eeePC and other notebooks are a hit because everything works. There is limited functionality but then that’s all the buyer is looking for. Nerds and geeks drool over it as they would over any gadget. Businessmen find them handy to carry from place to place – they weigh very little. Compared to something like my IBM Stink… er, ThinkPad, the eeePC is a featherweight. Even a child can carry it around – as indeed I’ve seen some children do.

AMD Doesn’t Plan on Letting NVIDIA Get Back Up

Where GPUs are concerned, times are interesting. Over the course of the past two years, NVIDIA dominated the low-end, mid-range and high-end markets, and the outlook for AMD looked grim. But lo and behold, big red had something up their sleeve and what they launched surprised even NVIDIA. That was proven by the fact that NVIDIA dropped the price of their newly launched GTX 280 by over $200 within the first month. Now that’s hitting someone where it hurts.

According to TG Daily, AMD has little intention to sit back and let NVIDIA redeem themselves. Their internal roadmap lays out plans to launch low-end cards from their 4000-series to compete with NVIDIA’s new 9400 GT, which was launched in response to an apparent increase in demand for low-end discrete graphics – an increase that AMD claims never existed.

Aside from the potential low-end cards, AMD is set to launch new mid-range cards next week which include the HD 4650 and HD 4670, both featuring 320 stream processors, along with 512MB and 1GB of memory, respectively. That’s all good… but I think most of us want to see what’s up NVIDIA’s sleeve next, especially after AMD’s recent HD 4870 X2 launch, which was equivalent to a full-blown whiplash for the green side.

We caught up with AMD’s Dave Baumann, who told us that AMD did not notice increasing demand in the $60 segment of graphics cards. However, he noted that demand isn’t decreasing either and has remained stable on a fairly high level. Commenting on Nvidia’s 9400 GT, Baumann said that AMD believes that this 55 nm G96 GPU may not be cheap to produce and represent a “quick-and-dirty-solution” to participate in the $60 market, which aims to provide PC users an affordable upgrade for IGC-equipped PCs.

Mythbusters Weren’t Allowed to Air RFID Segment

One video that seems to be spreading around the web like wildfire is of Adam Savage, who in a talk tells all about the Mythbusters’ inability to show their findings on RFID’s hackability on the show. The talk happened at the Hackers On Planet Earth conference that occurred this past July, which I covered here. In this video (embedded below), Adam is asked by an audience member why they haven’t tackled RFID, and it becomes obvious quickly that there’s a big problem surrounding why.

As he points out, quite simply, the credit card companies stepped in, because after all, they are the leaders in RFID usage, so who can blame them? Well, if only all of us thought like that, and I’m sure by now many of us already know just how hackable RFID really is. It’s just too bad the general masses do not.

During the same talk, Adam goes on about the show’s inability to do a segment on teeth whiteners, as they were stopped by the toothpaste companies. Since Discovery Channel is funded by commercials, to show such a segment would jeopardize their revenue. Adam stated that they found the ‘whitening strips’ to do absolutely nothing, although I have other people who tell me they do work, and they’re living proof. That one might have to be proven in your own house. Regardless, it’s not surprising that the show is shaped in such a way, but it’s still too bad.

“Oh dude, the RFID thing. I’m sorry, it’s just not going to happen. Here’s what happened, and I’m not sure how much of the story I’m allowed to tell, but I’ll tell you what I know. We were going to do RFID, on several levels, how hackable, how reliable, how trackable, et cetera, and one of our researchers contacted Texas Instruments…”

AMD to Bring Back ‘FX’ Series

Regardless of what market we are dealing with, there is always going to be a product that screams ‘ultra high-end’. It will cost a lot more than the rest, but gives certain perks that cause it to be drool-worthy for enthusiasts everywhere. One such case is with our CPUs. Intel’s Extreme Editions cost much more than the second rung in the ladder, but they are binned for perfection and stock-clocked higher than anything else.

But remember when AMD had such a series as well? With the launch of Intel’s latest Core 2 processors, AMD was whipped, and they knew it, so their ‘FX’ line was dropped. It was rather upsetting. When FX models first hit the market in 2003, they really meant something. I clearly remember the first time I read about the single-core FX-51, a 2.2GHz wonder chip that just screamed ‘take out a loan, now!’.

The last ‘real’ FX chip was the FX-60, a 2.6GHz Dual-Core offering that came out in early 2006. Since then, we saw CPUs for the Quad FX platform, but I don’t think we need to talk too much about that. For those who miss the FX moniker, fear not, as AMD plans to relaunch the series next summer. The big question, though, is whether or not AMD will be able to deliver a product that actually deserves the title. We can hope…

The code-named Deneb FX microprocessors that are projected to be launched sometime in the middle of next year will feature four processing engines, shared level-three cache, dual-channel DDR2 (up to PC2-8500, 1066MHz) and DDR3 (up to PC3-10666, 1333MHz) memory controller, according to sources with knowledge of AMD’s plans. The new chips are projected to utilize AM3 form-factor, which means better system flexibility.
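The module names in the excerpt above encode peak bandwidth: the number after "PC2-" or "PC3-" is roughly the transfer rate multiplied by the 8 bytes a 64-bit memory channel moves per transfer. A minimal sketch of that arithmetic follows. The function name is made up for illustration, the quoted "MHz" figures are treated as millions of transfers per second, and the results are theoretical peaks rather than anything measured on Deneb.

```python
def peak_bandwidth_mb_s(mega_transfers_per_s, bus_width_bits=64, channels=1):
    """Theoretical peak memory bandwidth in MB/s.

    Assumes the standard 64-bit (8-byte) DDR channel width; the helper
    itself is hypothetical and only illustrates the naming arithmetic.
    """
    bytes_per_transfer = bus_width_bits // 8
    return mega_transfers_per_s * bytes_per_transfer * channels


# Single-channel peaks for the two memory types named in the excerpt.
ddr2_1066 = peak_bandwidth_mb_s(1066)   # marketed as PC2-8500
ddr3_1333 = peak_bandwidth_mb_s(1333)   # marketed as PC3-10666

# A dual-channel controller doubles the theoretical peak.
ddr3_1333_dual = peak_bandwidth_mb_s(1333, channels=2)

print(ddr2_1066, ddr3_1333, ddr3_1333_dual)
```

The small gap between these figures and the marketing names comes from rounding: the nominal rates are closer to 1066.67 and 1333.33 million transfers per second.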

Atom Demand High, Intel Unable to Keep Up

One problem with a new product being so successful is that it’s sometimes difficult to keep up with the sheer demand, especially if you don’t have the factory power to back it all up. Take Nintendo’s Wii for example. Despite the fact that it was released two years ago, it’s still in short supply, and the only way to guarantee a console is by ‘knowing someone’ who works at a retailer that carries them.

Well, apparently Intel is suffering a similar issue with Atom. The demand is so high that they are simply unable to build enough, even though they get close to 2,500 chips per wafer. Thank ASUS, I guess, for kicking off the netbook PC revolution, because since they released their Eee PC, at least ten other companies have followed suit with their own mini-notebooks. Slowdown? Not going to happen for a while.

What could result from Intel’s inability to keep up with demand is extra sales for VIA and its Nano processor. Nano has its own caveats, but when Atom supply is limited, some companies are going to have to look elsewhere. As Ars Technica mentions, HP is the first company to pick up on Nano for their own netbook, but it wouldn’t be surprising to see other companies offer special models. This could be resolved if Intel converted one of their fabs to push out Atom, but that would of course affect another product line. Tough decisions at Santa Clara, it seems. Who said success was easy?

If anyone benefits from Intel’s constraint, I’d expect it to be VIA, but I also expect Santa Clara to keep a very close watch over Atom’s growth. If the company feels that it is losing important sales due to production constraints, it might very well decide to take a (small) hit in another processor family in order to establish Atom’s presence in as many first-generation netbooks as possible.

Sandra 2009 Brings GPGPU Benchmarking to our Toolbox

SiSoftware, creators of one of the most popular benchmarks ever, have released version 2009 of Sandra. We’ve used Sandra in our reviews for as long as the site’s been around, and it’s likely easy to understand why. It offers a slew of different tests, from ones that target the CPU to the RAM to the GPU. What? Did I just say the GPU? Why yes I did, and that happens to be one of the new features of the latest version.

As mentioned in the last news post, GPGPU is a term that really came out of nowhere, but is here to stay. Since GPUs have been found to be so efficient at general-purpose computing, a huge push is under way to shift certain applications over to them, to take advantage of the highly parallel architecture that the GPU offers. Noticing this, SiSoftware was quick to add three new tests to the latest version, all of which test the GPU and spit out a result similar to what we currently see with their CPU benchmarks.

The new benchmarks are ‘Video Rendering’, ‘Graphics Processing’ and ‘Graphics Bandwidth’, all of which are self-explanatory. You can see the results of the middle test below, which delivers results for both the float and double precision shaders in MPixel/s and GPixel/s, respectively. I could not immediately get the Video Rendering test to function properly, despite having a fully up-to-date system.

How useful the new benchmarks will prove to be in real-world use is unknown, but they could be just as useful as the results from the CPU ones we have now. This is at least a start, and though there is not that much importance placed on GPGPU right now, I think we’ll see things change over the next year, when applications that take advantage of the GPU begin to hit the market, such as video renderers and image manipulators. After we evaluate the new version more, we may begin to include results in our GPU reviews, if there is some interest in such a metric.

We believe the industry is seeing a shift from a model where the vast majority of workload is processed on the traditional CPU: in a wide range of applications developers are using the power of GPGPU to aid business analysis, games, graphics, and scientific applications. Coupled with the charts added to the latest version of the software, we can work out whether a CPU or GPU would be faster, more power efficient or cost efficient.

Source: Sandra 2009 Press Release, CPU vs. GPU Arithmetic, CPU vs. GPU Memory Bandwidth

What DirectX 11 Will Bring to the Table

It took a little while before DirectX 10 games hit the market, but now there are at least 20 different titles that are either native DX10 or at least support it. The most notable might be Crysis, although games like Call of Juarez, Assassin’s Creed, Gears of War (pictured below) and Lost Planet put it to the best use. The real benefits of DX10 might be seen when certain titles like Alan Wake and Crysis Warhead hit the market though… not to mention STALKER: Clear Sky. The next year in gaming is sure to be pretty…

What about DirectX 11? Microsoft formally announced the new API at their Gamefest conference in Seattle this past July, but what does it mean to developers, or gamers? According to Elite Bastards, it means a lot of things, and from what they’ve found out, Microsoft is really making an effort to ensure their upcoming API is well-received. Microsoft even goes as far as saying the best way to prepare for DX11 is to code with DX10 and 10.1, now. Not much will change as far as the code base is concerned, it seems.

One of the biggest new features for DX11 might be multi-threading support, allowing developers to begin taking full advantage of multi-core CPUs (or so we can hope). Another major feature is GPGPU support, which is good given the sheer amount of effort both Intel and NVIDIA are placing on using your GPU in non-gaming situations. In short, DX11 looks promising, and it might be one of the biggest single changes our GPUs have experienced in a while, at least on the software side.

These coding changes will also be reflected in an updated version of the DirectX HLSL (High Level Shading Language) used to write shaders and DirectX code. As we can see above, it appears that the general target for DirectX 11’s Compute Shader is still going to be the manipulation of graphics and media data, which as I mentioned previously suggests it won’t quite be invading on CUDA territory this time around by providing a complete coding structure geared towards creating an application of any kind in HLSL.

Using Rsync to Reliably Back Up Your Linux

Early last month, there was a slow news day, and as a result, I had to scrape the bottom of the bucket to find something to post about. Of course, the natural thing to do was pimp our old content, which I think is a great idea since there are many people who may not have seen it before, and well, even if you did see it before, you might appreciate the reminder. The last ‘article recall’ I did covered a Linux article, and well, let’s stick with the Linux theme for this one. Don’t worry, I plan to recall non-Linux content in the future as well.

If you run Linux, do you know what backup options are available to you? There are honestly quite a few solutions out there, and I admit that I haven’t tested any, so the suggestions I have might be more difficult than what is available. In “Backing Up Your Linux”, I give the power user’s solution for backing up your rig, because when you take care and write a simple, yet effective script, you know that your system is being handled and backed up properly.

The article covers many different ways to exploit ‘rsync’, and in case you don’t have your system set up in a certain way, I make sure to cover all the details. Though you can back up to another hard drive or even an external hard drive quite simply, the article also explains how to back up to network storage (a NAS box or even a Windows PC) and even to a remote server, where your data will remain safe even if something horrible happens to your local backups. So if you run Linux and have enough patience to read through the article and write up some great scripts, I highly recommend you read through, and I’m not just saying that because I wrote it. I swear.

There are many different mediums that you can back up to, but we are going to take a look at the three most popular and go through the entire setup process of each: 1) Backing up to external storage; 2) Backing up to a Network-Attached-Storage (NAS) and 3) Backing up to a remote server running Linux. On this first page, we are going to delve into the wonderful tool that is rsync, and give examples for you to edit and test out for yourself.
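To give a rough idea of what those three targets look like in practice, here is a minimal sketch that only builds the rsync command lines rather than running them. It is not taken from the article: the helper name, the paths and the host are all made up for illustration, and the NAS share is assumed to be already mounted at a local path. The rsync options used (-a, -z, -e, --delete, --dry-run) are standard ones.

```python
import shlex


def rsync_command(source, destination, remote_host=None, delete=False, dry_run=False):
    """Build an rsync argument list (hypothetical helper, for illustration only)."""
    args = ["rsync", "-a"]          # -a: archive mode (recursive, keeps permissions, times, links)
    if delete:
        args.append("--delete")     # remove files from the backup that no longer exist in the source
    if dry_run:
        args.append("--dry-run")    # show what would be transferred without copying anything
    if remote_host is not None:
        args += ["-z", "-e", "ssh"]  # compress in transit and tunnel over SSH
        destination = f"{remote_host}:{destination}"
    # A trailing slash on the source copies its contents rather than the directory itself.
    args += [source.rstrip("/") + "/", destination]
    return args


# All paths and the host below are placeholders, not values from the article.
external = rsync_command("/home/user", "/mnt/external/backup", delete=True)
nas = rsync_command("/home/user", "/mnt/nas/backup", delete=True)
remote = rsync_command("/home/user", "/backups/user", remote_host="user@backup.example.com")

for cmd in (external, nas, remote):
    print(shlex.join(cmd))
```

Each list could be handed to subprocess.run(cmd, check=True) once the paths are adjusted, and passing dry_run=True first is a cheap way to check what --delete would remove before trusting it.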

Source: Backing Up Your Linux

Microsoft Preparing to Launch Mobile Apps Store?

It isn’t too often that Apple seems to make a poor business decision, and the launch of their apps store was an incredible one, evidenced by the fact that it pulls in around $1M in revenue every single day. Well, Google also offers such a service, though it’s nowhere near as popular as Apple’s incarnation. It’s no surprise then, that Microsoft wants a piece of the action, and apparently they’re planning to launch their own apps store in the near future.

The service will be called ‘Skymarket’ and consist of applications suitable for the Windows Mobile platform, essentially anything that’s not an iPhone. Few details are known right now about the new service, but Microsoft is actively seeking someone to fill the position of Senior Product Manager. The original listing is now gone, though, so the position might have been filled, or is at least close to being filled.

How successful Microsoft’s shot at a mobile store will be is anyone’s guess, but it’s bound to be a success with all those who are sticking with Windows Mobile as their handheld platform of choice. I don’t own an iPhone, nor have I ever experienced Apple’s apps store, but I do know people who love it, and visit it daily. It seems nowadays the best kind of developer to be is a mobile developer… it’s an industry that’s certainly on a steady incline.

It appears the software giant expects to launch an applications store called “Skymarket” this fall for its Windows Mobile platform, if a recent job posting spotted by Long Zheng at Istartedsomething.com is accurate. According to the ad posted Sunday on Computerjob.com, the Skymarket senior product manager will head a team that will “drive the launch of a v1 marketplace service for Windows Mobile.”