Accelerating Creator Workflows With NVIDIA’s GeForce RTX

When GeForce launched 20 years ago, powering cutting-edge games was its focus. Over time, GPUs became more capable, evolving to the point where they were not just useful in creative design, but vital. Last summer, the entire creative industry got a shot in the arm with the launch of NVIDIA’s RTX family of GPUs and features. In this article, we’re going to explore why that is.

NVIDIA sponsored our time to deep-dive RTX so that we could produce this article.

When we look back on the last couple of years of articles we’ve written centered on media and entertainment, the changes have been staggering. We’ve seen the introduction of Tensor cores and the explosion of AI, with the resulting research being applied to mainstream software we all use. We’ve also seen the introduction of ray tracing APIs, along with NVIDIA’s real-time ray tracing hardware under the RTX banner.

At Computex 2019, NVIDIA introduced its Studio platform, hardware and software dedicated to creative workloads. NVIDIA Studio combines GeForce- and Quadro-powered systems with the NVIDIA Studio Stack of specialized SDKs and dedicated Studio Drivers, all of which goes through extensive, rigorous testing with creative applications and workloads. There’s also NVIDIA’s RTX Studio range of dedicated workstations and laptops, which bring real-time ray tracing, AI acceleration, and high-quality video editing features together in a single package to help artists create content in record time.

We’ve previously covered ray tracing in gaming, and the industry’s push toward photorealism with realistic shadows, reflections, caustics, and so on. This article concentrates on the creative side: the businesses and people who make the games, movies, and even products.

For over a decade, NVIDIA has developed CUDA, its own parallel computing platform and programming model, to harness the power of its GPUs in more general-purpose applications. As CUDA matured and gained features, it gave rise to new hardware acceleration to improve these workflows. Then, a few years ago, we saw the first steps of a new industry built around neural networks, machine learning, and AI. What took everyone by surprise was how quickly these fields took off.

In May 2017, a new wave of hardware purpose-built for AI arrived. One such offering was NVIDIA’s Volta architecture, which debuted with the V100 GPU. This chip introduced AI-centered Tensor cores, which have become a key building block not just in AI development and inference, but also in NVIDIA’s RTX platform. Just one year later, in March 2018, came one of the most impressive technical launches of a new feature we’ve seen. Everyone went ray tracing crazy over the now iconic Star Wars Reflections demo, which used DirectX Raytracing (DXR) and NVIDIA’s RTX.

While the Star Wars demo was impressive, it came with a steep hardware requirement: four GV100 GPUs working in unison. Little did we know at the time that dedicated ray tracing hardware was on the way. A few months later, in August, just before SIGGRAPH 2018, we had the privilege of interviewing J. Turner Whitted, the creator of recursive ray tracing. A few days after that, NVIDIA announced its very first real-time ray tracing GPUs with the Quadro RTX launch, and just a week later, GeForce RTX launched at Gamescom.

DXR, RTX, And Tensor Cores

It’s worth pointing out that ray tracing isn’t exclusive to NVIDIA’s RTX; it’s part of the DirectX Raytracing API (DXR), as well as Vulkan RT. NVIDIA was one of the first to accelerate these ray tracing APIs in hardware, with the help of its RT and Tensor cores. It’s the RT cores that make real-time ray tracing possible, by accelerating Bounding Volume Hierarchy (BVH) traversal and ray-triangle intersection tests.

The job of these RT cores is simple, but tedious. BVH traversal is essentially collision detection for rays: it works out where in a scene a ray strikes an object. Each object is wrapped in a boundary, a simple volume of space (typically a box) that roughly describes where it is, and these boxes are nested inside progressively larger ones to form a tree. When a cast ray hits one of these boundaries, the search moves down to the smaller boxes inside it; when it misses, everything inside that box is skipped. This continues with smaller and smaller boxes until either the ray reaches the actual geometry and an intersection is found, or it flies past without hitting anything. The RT cores quickly figure out where a ray hits an object, then pass the hit off to a shader that calculates the final pixel value. All of this can be done on the regular shader cores, but it takes far longer, and that’s the crucial point: RT cores allow it to be done in real time.
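To make the traversal idea concrete, here is a minimal Python sketch, assuming axis-aligned bounding boxes and spheres as stand-in primitives (real scenes are mostly triangles, and RT cores do this in fixed-function hardware rather than in code like this). The `Sphere`, `Node`, `hit_box`, and `traverse` names and the two-object scene are hypothetical. The `stats` counters show the payoff: a ray that misses a box never tests anything inside it.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Sphere:
    """Stand-in primitive; real scenes are mostly triangles."""
    center: tuple[float, float, float]
    radius: float

    def intersect(self, origin, direction):
        """Distance t to the nearest hit in front of the ray, or None."""
        oc = [o - c for o, c in zip(origin, self.center)]
        a = sum(d * d for d in direction)
        b = 2.0 * sum(o * d for o, d in zip(oc, direction))
        c = sum(o * o for o in oc) - self.radius ** 2
        disc = b * b - 4.0 * a * c
        if disc < 0:
            return None
        root = math.sqrt(disc)
        for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
            if t > 1e-9:
                return t
        return None


@dataclass
class Node:
    lo: tuple[float, float, float]   # min corner of the bounding box
    hi: tuple[float, float, float]   # max corner of the bounding box
    children: list[Node] = field(default_factory=list)
    prims: list[Sphere] = field(default_factory=list)  # filled only at leaves


def hit_box(lo, hi, origin, direction):
    """Slab test: does the ray pass through the axis-aligned box [lo, hi]?"""
    t_near, t_far = 0.0, math.inf
    for axis in range(3):
        o, d = origin[axis], direction[axis]
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo[axis] or o > hi[axis]:
                return False
            continue
        t0, t1 = (lo[axis] - o) / d, (hi[axis] - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def traverse(node, origin, direction, stats):
    """Closest hit distance in this subtree, or None; missed boxes are culled."""
    stats["box_tests"] += 1
    if not hit_box(node.lo, node.hi, origin, direction):
        return None  # one failed test skips everything inside this box
    best = None
    for prim in node.prims:
        stats["prim_tests"] += 1
        t = prim.intersect(origin, direction)
        if t is not None and (best is None or t < best):
            best = t
    for child in node.children:
        t = traverse(child, origin, direction, stats)
        if t is not None and (best is None or t < best):
            best = t
    return best


# Hypothetical scene: two spheres, each in its own leaf box, under one root box.
left = Node((-3, -1, 4), (-1, 1, 6), prims=[Sphere((-2, 0, 5), 1.0)])
right = Node((1, -1, 4), (3, 1, 6), prims=[Sphere((2, 0, 5), 1.0)])
root = Node((-3, -1, 4), (3, 1, 6), children=[left, right])

stats = {"box_tests": 0, "prim_tests": 0}
print(traverse(root, (2.0, 0.0, 0.0), (0.0, 0.0, 1.0), stats), stats)
```

Here the ray tests three boxes but only one sphere; the sphere in the left box is never checked because its box was culled. That pruning is what lets traversal scale to scenes with millions of triangles.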

As powerful as these RT cores are, they still need a little help, and that’s where the Tensor cores come in. Ray tracing is very computationally expensive, and 3D designers have used all kinds of tricks to speed up the process. One common trick is to cast a limited number of rays to get a rough, noisy image, and then use a denoise filter to smooth out the rough patches.

Neural networks trained over several years on these noisy ray-traced images can fill in the blank spots in a render. The Tensor cores on RTX cards run these networks, very effectively ‘guessing’ the missing pixel values from their neighbors. It’s more than a simple post-process blur filter, as it takes into account the depth of each object, its proximity to others, environmental lighting, the normal map, and so on, producing very accurate and surprising results, very quickly. These denoisers were among the first examples used to showcase the Tensor cores.
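NVIDIA’s denoisers are trained neural networks, which can’t be reproduced in a few lines. As a hedged illustration of why auxiliary buffers such as depth matter, the Python sketch below swaps in a classic, hand-tuned joint (cross) bilateral filter on a single scanline and compares it with a plain blur. The scene, the `joint_bilateral_1d` and `box_blur_1d` helpers, and the fixed ±0.1 noise pattern are all hypothetical.

```python
import math


def joint_bilateral_1d(noisy, depth, radius=2, sigma_s=1.0, sigma_d=0.5):
    """Average each pixel with nearby pixels, down-weighting different depths."""
    n = len(noisy)
    out = []
    for i in range(n):
        total = weight_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w_space = math.exp(-((i - j) ** 2) / (2 * sigma_s ** 2))
            w_depth = math.exp(-((depth[i] - depth[j]) ** 2) / (2 * sigma_d ** 2))
            total += w_space * w_depth * noisy[j]
            weight_sum += w_space * w_depth
        out.append(total / weight_sum)
    return out


def box_blur_1d(noisy, radius=2):
    """Plain blur for comparison: ignores depth, so it smears across edges."""
    n = len(noisy)
    out = []
    for i in range(n):
        window = noisy[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


# Hypothetical 16-pixel scanline: a dark object (depth 1) in front of a
# bright background (depth 10). The alternating +/-0.1 pattern is a
# deterministic stand-in for the noise of a low-sample render.
truth = [0.2] * 8 + [0.8] * 8
depth = [1.0] * 8 + [10.0] * 8
noisy = [t + (0.1 if i % 2 == 0 else -0.1) for i, t in enumerate(truth)]

smoothed = joint_bilateral_1d(noisy, depth)
blurred = box_blur_1d(noisy)
print("edge pixel 7, truth 0.2:", round(smoothed[7], 3), "vs", round(blurred[7], 3))
```

Both filters suppress the noise in flat areas, but only the depth-aware filter keeps the edge between object and background sharp; the plain blur drags the edge pixel from 0.2 up to about 0.42. A learned denoiser goes further by using more guide buffers and learned, rather than hand-picked, weights.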

RTX In Software

It didn’t take long for 3D design software to catch on to the tangible rendering speedups NVIDIA’s RT cores can provide. GPU rendering has been around for a number of years. In its early days, it couldn’t match the range of render options CPU rendering offered, but it soon caught up. Even long-standing CPU-only renderers like Arnold have since added GPU rendering support while retaining the full feature set.

When NVIDIA showed off what its RT and Tensor cores could do, nearly every 3D design suite began developing support for these RTX features. It’s not just 3D work that’s on the table, either; video editors such as Adobe Premiere Pro are, too. DaVinci Resolve is another notable adopter, using Tensor cores for various AI-assisted effects, such as upscaling, stylizing, object detection and tracking, and various compositing effects.

While not strictly related to RTX, one of the big surprises of the year, and an immensely important one, was NVIDIA enabling 30-bit OpenGL color support on GeForce and TITAN graphics cards, no longer confining it to its Quadro line of professional GPUs. Color grading and video editing applications from Adobe, as well as DaVinci Resolve, can now do full 10-bit-per-channel color authoring on mainstream hardware with a compatible monitor. Currently, the competition only offers this feature on its professional GPUs.

The 10-bit addition to mainstream GPUs stems from the growth of HDR content. Movies are no longer produced only by large studios, and video games now offer HDR rendering, so it makes sense to let content creators, be they professionals, streamers, or gamers, make use of the extended color palette.
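30-bit color means 10 bits per color channel instead of the usual 8. The short Python sketch below walks through the arithmetic (2^10 = 1,024 levels per channel versus 256) and its effect on a subtle gradient, where fewer distinct codes mean wider, more visible bands. The `levels`, `quantize`, and `pack_rgb10` helpers are hypothetical, and the packed layout is only similar in spirit to the 10:10:10:2 pixel formats graphics APIs expose, not any specific driver’s format.

```python
def levels(bits):
    """Number of distinct values one color channel can hold."""
    return 2 ** bits


def quantize(value, bits):
    """Map a 0.0-1.0 intensity to the nearest integer code at this bit depth."""
    return int(value * (levels(bits) - 1) + 0.5)


def pack_rgb10(r, g, b):
    """Pack three 10-bit codes into one 30-bit integer, red in the high bits."""
    if not all(0 <= c < levels(10) for c in (r, g, b)):
        raise ValueError("each channel must fit in 10 bits (0-1023)")
    return (r << 20) | (g << 10) | b


# A subtle gradient (think of a dim sky) running from 50% to 55% brightness
# across 1,000 pixels; fewer distinct codes means wider, more visible bands.
n = 1000
ramp = [0.50 + 0.05 * i / (n - 1) for i in range(n)]
steps_8 = len({quantize(v, 8) for v in ramp})
steps_10 = len({quantize(v, 10) for v in ramp})

print("24-bit colors:", levels(8) ** 3)    # 256**3
print("30-bit colors:", levels(10) ** 3)   # 1024**3
print("distinct steps in the ramp:", steps_8, "vs", steps_10)
```

Over that 5% slice of brightness, 8 bits per channel gives only 13 distinct codes while 10 bits gives 52, so each band is roughly a quarter as wide. That is the practical reason HDR and color-grading work benefits from the deeper pipeline.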

The list of companies that have either integrated RTX support or have it planned is astonishing. It includes Adobe’s Dimension, Photoshop, Lightroom, and Substance; Autodesk’s Arnold; Blender; DaVinci Resolve; finalRender; Dassault’s CATIA and SolidWorks Visualize; Daz 3D; Unreal Engine; Luxion’s KeyShot; Maxon’s Redshift; OTOY’s OctaneRender; RenderMan; Siemens NX RT Studio; Unity; and many others.

We can’t look at all of them, but we do have first-hand experience with some, and plenty of examples of what RTX can do in the real world, beyond gaming.

Blender 2.81 OptiX And Octane

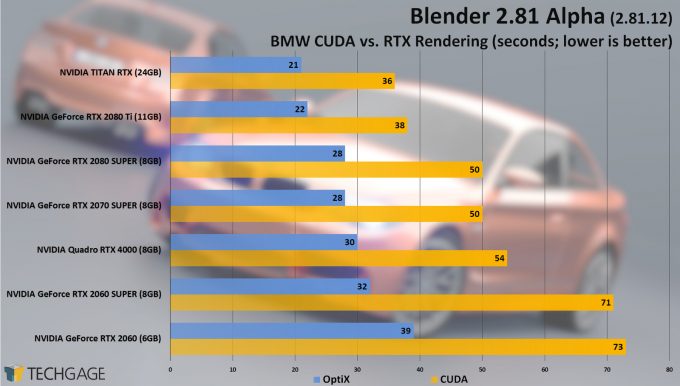

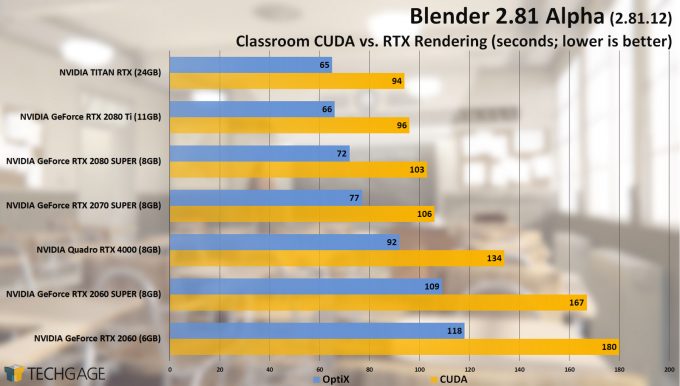

As part of the new wave of software announcements that included RTX support, Blender was high on the list of design suites we wanted to test. Blender 2.81 can now use OptiX as a rendering backend for its Cycles engine. OptiX includes an AI denoiser, but it isn’t included with Blender by default; plugins such as Remington’s D-NOISE, available on GitHub, can add it.

Blender’s render engine, Cycles, is actually the perfect showpiece for what RTX can do. It’s an unbiased renderer that can use the CPU, the GPU, both together in mixed mode, and now RTX hardware through OptiX. This gives us the opportunity to see how all of the hardware can be used in different ways within the same application, with the same projects: a true apples-to-apples test suite.

We thoroughly tested an alpha build of Blender 2.81 that had a near-complete implementation of the OptiX engine. The charts show impressive performance boosts from the RT cores doing the heavy lifting, cutting render times by as much as half in some cases. You can read the full results in our dedicated article, but keep in mind that many of the issues we encountered have since been fixed. Performance is unchanged in the official 2.81 release, and remains very impressive.

Luxion announced plans to add GPU support to its KeyShot render engine at SIGGRAPH 2019, and it didn’t stop at GPU support: it went head first into RTX support, too. Just last month, Luxion officially released KeyShot 9, bringing the new rendering engine, a bunch of new features, and denoising and accelerated rendering using those Tensor and RT cores. We haven’t had a chance to dive into testing yet, but it’s in the works.

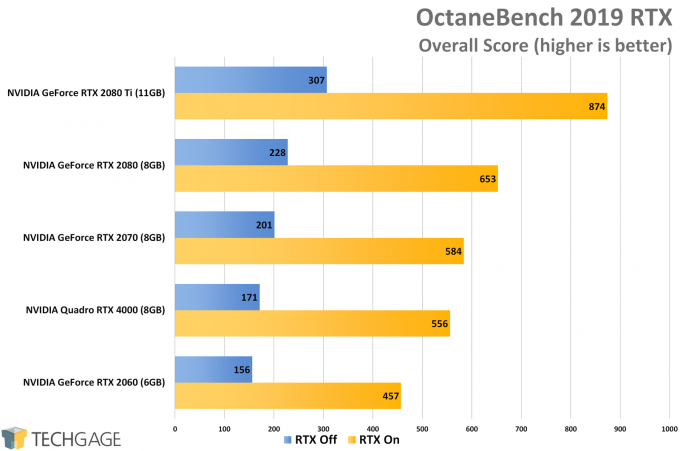

OTOY’s OctaneRender was another of the big render engines to enable RTX support. Although that support was only announced shortly after NVIDIA’s GTC event earlier this year, we managed to get a sneak peek at a beta of the new OctaneBench, which can test the RTX rendering features. It uses the same rendering engine that will be built into the official renderer, but in a more convenient format for benchmarking. We put a number of GPUs through it and were astounded at the difference those RT cores could make.

This chart compares each GPU’s score with RTX turned on and off. From the mid-range GeForce RTX 2060 up to the 2080 Ti, every GPU saw a 2.5x increase in performance, averaged over three tests. While this is a score-based metric, real render times will improve to at least a similar degree.
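As a rough worked example, assume the benchmark score scales linearly with rendering throughput (work completed per second); that is our assumption, not something OctaneBench guarantees. A fixed amount of render work then takes time inversely proportional to the speedup $S$:

$$
t_{\text{RTX on}} = \frac{t_{\text{RTX off}}}{S}, \qquad S = 2.5 \;\Rightarrow\; t_{\text{RTX on}} = 0.4\,t_{\text{RTX off}}
$$

In other words, a 2.5x speedup would shrink a render to 40% of its original time, a 60% cut, while the "as much as half" Blender result corresponds to $S = 2$.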

V-Ray, Adobe, And More

V-Ray is another popular rendering engine; its plugins for 3ds Max and Maya were recently updated to bring RTX features into its workflow. V-Ray is something we test frequently here, and we plan on doing a deep dive into its performance benefits later. The same goes for Maxon’s Redshift. Autodesk’s Maya 3D design suite and Arnold renderer have also enabled RTX features for rapid rendering and AI denoising.

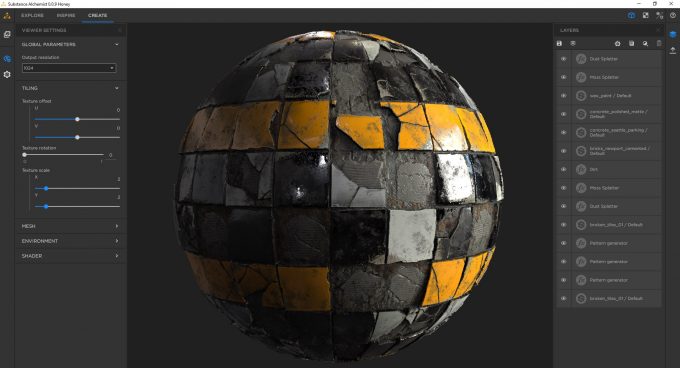

Adobe has its own 3D applications, such as Dimension and Substance, that use RTX features. Dimension allows interactive editing of full renders, changing colors and lighting within a scene, accelerated by RTX hardware. Substance has multiple applications in its suite. Alchemist uses the Tensor cores to break 2D images down and convert them into 3D textures, relying on AI to automatically mask areas of interest and adjust lighting in select areas. This potentially saves the artist hours of masking and editing time. Substance Painter and Designer use RTX to accelerate map baking (saving detail from high-poly-count 3D geometry as a texture), and you don’t need a Turing GPU for this, since GeForce 10-series GPUs can also speed up the process using DXR.

DaVinci Resolve’s Neural Engine uses NVIDIA AI libraries and Tensor cores for features such as Speed Warp, which interpolates new frames when slowing down footage. Super Scaling upscales low-resolution footage to 4K without making everything look blurry (similar AI upscaling is used in games as part of DLSS). Various automated color matching and stylizing effects take much of the hard work out of manual adjustments, and there’s even facial recognition that can be used to automate tagging or track characters in a composition.
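Speed Warp’s neural network estimates how things move between frames, which a short example can’t reproduce. The Python sketch below instead assumes the motion is already known (a single hypothetical global shift on a 1D "frame") to show why motion-aware interpolation matters: simply averaging two frames produces a ghosted double image, while moving pixels halfway along their motion puts the object where it should be. The function names and the clip are hypothetical.

```python
def blend_midframe(a, b):
    """Naive in-between frame: average the two frames pixel by pixel."""
    return [(x + y) / 2 for x, y in zip(a, b)]


def motion_compensated_midframe(a, b, motion):
    """In-between frame from a known, global, integer motion (in pixels).

    Each output pixel samples frame a half a step back along the motion and
    frame b half a step forward, then averages the two samples.
    """
    if motion % 2:
        raise ValueError("this sketch only handles even motion")
    n, half = len(a), motion // 2
    out = []
    for i in range(n):
        src_a, src_b = i - half, i + half
        va = a[src_a] if 0 <= src_a < n else 0.0
        vb = b[src_b] if 0 <= src_b < n else 0.0
        out.append((va + vb) / 2)
    return out


# Hypothetical 1D clip: a bright dot moves 4 pixels to the right between frames.
frame0 = [0.0] * 8
frame0[1] = 1.0
frame1 = [0.0] * 8
frame1[5] = 1.0

print(blend_midframe(frame0, frame1))
print(motion_compensated_midframe(frame0, frame1, motion=4))
```

The naive blend yields two half-bright dots at the old and new positions, while the motion-compensated frame yields one full-brightness dot halfway between them. Estimating those motion vectors for real footage is the hard part that Speed Warp hands to AI.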

All of these AI and ray tracing benefits come in addition to NVIDIA’s widely supported CUDA platform, which countless applications use, plus the dedicated NVENC and NVDEC video encoding and decoding hardware. And all of this is available on GeForce hardware, not just on professional graphics cards like the Quadro RTX series.

Now And The Future Of RTX

It has been surprising on many levels to see just how far NVIDIA’s RTX platform has come in the last year. The last few months alone have brought announcement after announcement showing the creative industry not just latching onto RTX, but embracing it in new and exciting ways. The biggest surprise isn’t just the 2-3x performance increases we’re seeing in applications, or the ingenuity behind the AI research; it’s the sheer scale of the industry’s support.

These ray tracing and AI inference engines are not restricted to NVIDIA’s Turing architecture. Blender works well with any GPU, but the addition of RT cores can really speed up the process. Adobe has experimented with AI in its applications behind the scenes for a few years; the Tensor cores on Volta and Turing cards simply speed things up.

In an industry where time is money, every advantage that can be leveraged should be. Real-time interactive renders of ray-traced scenes with AI-assisted denoising allow creatives not just to rapidly change and adapt projects, but to actually experiment with new ideas and methods without feeling guilty about wasting an hour on a bad render with old hardware.

With all that we’ve seen this last year, and the support the industry has shown, we can’t wait to see what next year and the next decade bring.
