Tech News

ATI’s Graphics Card Drivers Need Serious Love

If there’s one thing I know well, it’s that from time to time, people can run into a string of bad luck. The past few weeks have proved that to me, where computers are concerned at least, and I’m sure I’ll divulge more about that at some point. As I mentioned earlier this month, we have some great content coming up, including our review of NVIDIA’s GTX 285 and GTX 295, which should have been posted last week. I won’t get into all of the reasons for the slow posting here, but I will just say that a) manually re-benchmarking a slew of graphics cards takes a while and b) things that can go wrong sometimes do.

I’ll pick on just one thing here though, because when I think back to all of this re-benchmarking I’ve been doing, there’s been one problem to top them all… ATI’s GPU drivers. Now, I try to keep an open mind when it comes to issues with hardware, and it’s important to, to help avoid feeling biased. There’s something about ATI cards that just works against me though, and that was well-evidenced over the course of the past few days.

As mentioned above, I’m in the process of re-benchmarking numerous cards since we are shifting our previous GPU testing machine to be based on the Core i7 platform. Well, I spent almost an entire week on NVIDIA cards, and so the time was right to move over to ATI’s cards, but problems ensued. Now, I’ll preface this by saying that I’ve had many problems with ATI’s drivers in the past, but that’s not the problem. The problem is that I’m still running into these exact same issues, and I can’t for the life of me understand why.

To keep an already long story shorter, I’ll say that the main issue that plagues ATI cards is the bug where installing the driver will do nothing. When I reviewed ATI’s HD 4870 X2 last summer, the issue that crept up was a driver that never actually “stuck”. At its worst, I had to re-install the driver four times before it would finally work. Most times, I’d install the driver, only to reboot and get an error about an appropriate card not being found. Frustrating, to say the least.

Since that time, I really haven’t had any major issues, but I did have some slight ones when I sat down to benchmark Sapphire’s HD 4850 X2. In that particular scenario, I installed both the 8.12 and 8.12 Hotfix drivers off of the official site, and neither worked. At least, not at first. After installing the hotfixed drivers for the second time, the card was operable.

This past weekend, I had similar troubles. After installing the HD 4870 X2, it took four driver installs (between 8.11 and the latest hotfixed driver) before the card worked, and on the HD 4850 X2 side, it took one re-install for it to function. At first, I thought it was just an issue with dual-GPU cards, but I had the same issue with the 1GB HD 4870, where I had to re-install the driver once to have it function. To add to the pain, Mirror’s Edge didn’t fare well on any ATI card, and at first, I was stumped. The game would run, but at an obscenely low average FPS (think 2). The problem? ATI’s “Catalyst AI” was enabled (which is the default). After I disabled it, the game ran fine.

Let me get one thing straight. I love ATI, and for the most part, I grew up on ATI cards. I loved seeing them strike back against NVIDIA last summer with their HD 4000 series… and who wouldn’t? One decision that always bothered me though, was their needlessly bulky drivers, based on none other than .NET code. I’m not sure if the issues I ran into are related to that, but I’m sure it doesn’t help. I’m assuming that I’m not the only one who’s ever experienced these issues, because I’ve run into these on two completely different platforms with completely different GPU configurations.

I’ll leave NVIDIA’s drivers out of this, but while they are not perfect either, I haven’t experienced a major issue like this since at least the Vista launch. ATI really, really has to get the driver situation sorted out, because as I said, there’s no way I’m the only frustrated user. The Mirror’s Edge bug really stumps me, because as it seems, anyone who picks up this game on an ATI card might run into an issue, and not too many regular consumers are going to know how to tackle it. So am I alone here? Let us know in the discussion thread.

Discuss: Discussion Thread

Intel’s Craig Barrett to Retire in May

Intel on Friday announced that Craig Barrett will retire in May from both his role as Chairman of the Board and also from the Board of Directors. Craig became the Chairman in May 2005 after leaving his CEO position, which is now in Paul Otellini’s hands. His tenure as CEO lasted from 1998 to 2005, and if you think he’s simply a CEO who fell into his position, you’d be wrong. Craig has been with Intel since 1974, equating to a staggering 35 years with the company.

In the press release, Barrett noted that he was fortunate to work with such industry legends as Gordon Moore, Bob Noyce and Andy Grove, the former two of whom founded the company in 1968. From a geek’s perspective, that thought sounds like a dream. Craig has been around since the introduction of Intel’s simplest microprocessors. In 1974, the new-fangled processor at the time would have been the Intel 4040, a chip clocked at roughly 0.5MHz that no doubt screamed through all the latest benchmarks at the time.

Craig will be replaced by Jane Shaw, who first joined Intel’s board in 1993 and previously worked as Chairman and CEO of a pharmaceutical company. From an end-user perspective, we never heard much from Craig to begin with, but his expertise and knowledge cannot be denied… that simply comes with working in such a technologically-advanced company for 35 years. I can’t help but imagine how cool it would have been to join such a company in 1974. Technology is exciting today, but things were so different then. It’s easy to take such things for granted today, that’s for sure. Good luck, Craig.

“Intel became the world’s largest and most successful semiconductor company in 1992 and has maintained that position ever since,” said Barrett. “I’m extremely proud to have helped achieve that accomplishment and to have the honor of working with tens of thousands of Intel employees who every day put their talents to use to make Intel one of the premier technology companies in the world. I have every confidence that Intel will continue this leadership under the direction of Paul Otellini and his management team.”

Apple’s DRM-Free, But Where’s Downloadable Music for Audiophiles?

It may be difficult to believe, but despite the fact that iTunes is up to version 8, and has been out since 2001, I purchased my first track from the service just this past weekend. What took me so long? DRM took me so long. As soon as I heard the wretched tech was gone, I decided to install the application and see what the service was like. So far, I’ve purchased only a single song, but I’m definitely keeping the service in mind for whenever I need a track that I can’t get anywhere else.

My predicament is this. I’d use iTunes a lot more often if they offered music in some lossless audio codec. As far as I’m aware, that option just simply doesn’t currently exist, anywhere. I admit, the 256Kbit/s audio that iTunes offers isn’t horrible, and it used to be far worse, but if you have a pair of multi-hundred dollar headphones (or speakers) and an expensive audio card, the desire for audiophile-geared bitrates becomes more than a simple desire. It becomes a requirement, and if a service doesn’t offer it, there’s a serious lack of ambition to go back.
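To put that bitrate gap in rough numbers, here is a minimal sketch comparing a 256Kbit/s download with uncompressed CD audio. The CD parameters (44.1kHz, 16-bit, stereo) are the standard figures; the four-minute track length and the function names are assumptions for illustration only, and a real lossless codec would land somewhere below the uncompressed figure.

```python
# Standard CD audio (PCM) parameters.
CD_SAMPLE_RATE_HZ = 44_100
CD_BITS_PER_SAMPLE = 16
CD_CHANNELS = 2


def cd_bitrate_kbps() -> float:
    """Bitrate of uncompressed CD audio in kbit/s."""
    return CD_SAMPLE_RATE_HZ * CD_BITS_PER_SAMPLE * CD_CHANNELS / 1000


def track_size_mb(bitrate_kbps: float, seconds: int) -> float:
    """Approximate size in megabytes (10^6 bytes) of a track at a constant bitrate."""
    total_bits = bitrate_kbps * 1000 * seconds
    return round(total_bits / 8 / 1_000_000, 2)


# Hypothetical four-minute track, chosen only for illustration.
TRACK_SECONDS = 4 * 60

print(f"CD audio bitrate: {cd_bitrate_kbps()} kbit/s")
print(f"256 kbit/s track: {track_size_mb(256, TRACK_SECONDS)} MB")
print(f"Uncompressed track: {track_size_mb(cd_bitrate_kbps(), TRACK_SECONDS)} MB")
```

The uncompressed figure is an upper bound on what a lossless download would need, which is why the extra bandwidth is worth weighing against the cost of storage.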

I’m willing to sway a bit if I need a single track and don’t feel like importing a disc for it, which was the reason for the track I purchased over the weekend. While I’m still one of those people who loves running out to the music store to pick up a CD and then back home to rip it, I’d seriously consider buying most of my music from a downloadable service that offered lossless audio. We’re at a time when both bandwidth and storage are inexpensive, and I have little doubt that most people who want lossless audio wouldn’t object to paying a little more for it, because after all, bandwidth isn’t free, and there should very well be a premium.

Using iTunes this weekend did little more than fill up the dream I have of being offered lossless audio for download. The application has a great interface, and I couldn’t believe it had the exact version of some semi-obscure track I wanted, so all I can hope is that some day, either iTunes or some other comparable service will begin catering to those who lust for lossless audio. I know I’m not the only one who wants this, so let’s see it happen… from anyone.

Discuss: Discussion Thread

Can ACard’s DDR2 RAM Disk Beat an SSD?

Fast storage, can we ever get enough? With the introduction of the solid-state disk, it’s become so clear to us all just how much of a bottleneck traditional mechanical storage has become. Our CPUs are getting faster, but with even faster storage, we could see substantial gains in certain areas that would make it feel like we doubled the speed of our machine. I for one cannot wait for SSDs to come down in price even further and increase in density. When we all have one in our own machine, it will be a good day.

But with SSD hogging the limelight lately, what about other solutions? Remember the Gigabyte i-RAM? Essentially, it was also a solid-state disk, but much larger than what we are used to today. ACard is one company that didn’t want the idea to die though, as they’ve released a similar part that takes advantage of DDR2, up to 64GB worth (via 8GB sticks).

Our friends at Tech Report have a look at the new device, and to say the results are drool-worthy would be a slight understatement. In many tests, it actually out-performs Intel’s ultra-fast X25-E, but, it does cost more, so in some regards, it scales. The other downside is the density. DDR2 might be inexpensive now, but the device can only fit 16GB worth of 2GB modules, and going higher really isn’t worth it since higher-density modules escalate in price fast. Still, this is a great idea and it’s cool to see another option on the market for those who are interested.

Another benefit DRAM has over flash memory is that there’s no limit on the number of write-erase cycles it can endure. Effective wear leveling algorithms and single-level cell memory can greatly improve the lifespan of a flash drive, but they just prolong the inevitable. DRAM’s resiliency does come with a cost, though. While flash memory cells retain their data when the power is cut, DRAM is volatile, so it does not. To keep DRAM data intact, you have to keep the chips juiced.

Could Netbooks Curb PC Innovation?

That’s the big question, and it’s a good one brought up by VoodooPC founder Rahul Sood over at his blog. His thoughts are that with the advent and development of netbooks, PC innovation could be curbed, and for the most part, I can agree with him on most points. An interesting point brought up that I didn’t think much about before, is that it can prove difficult today for someone to tell the difference between a netbook and a notebook. It’s easier for those of us who know, but how would a regular consumer know?

At CES a few weeks ago, AMD showed me an upcoming HP notebook, one that I was so impressed with, I awarded it one of our Best of CES 2009 awards. It includes AMD’s latest Atom competitor, Athlon Neo, so in some regards, you might consider it a netbook right off the bat. But, it isn’t, because it features a 12″ form-factor, and that likely disqualifies it from the netbook category immediately.

So when a notebook comes in that size, features almost everything a regular notebook does in terms of connectivity, how exactly does a consumer tell the difference? I can state that for the most part, I enjoy netbooks to some small extent, but I’d easily pay two or three times as much for a 10″ notebook if it could offer me the power of a real notebook (with a real full-blown CPU). But, that’s the problem… these netbooks and other variants may throttle growth in the regular notebook market, and it’s easy to see why.

Rahul brings up some other good points as well, so it’s well worth a read.

Netbooks are everywhere. It’s amazing that companies are all launching these tiny platforms and just as quickly they’re busy slashing prices in order to stay ahead of the next guy. In some ways Netbooks helped generate sales in a tough economy – but as the core of the Netbook platform morphs into other devices we may find ourselves in an increasingly challenging situation.

FPS PC Games That Shouldn’t Be Missed

Hardcore PC gamer? Then chances are good you enjoy a good first-person shooter from time to time. Well, being a PC gamer, it’s probably hard to not enjoy this genre, because let’s face it, it seems to be the de facto genre that people think of most when picturing PC gaming in general. Not to mention, FPS games are huge at LANs, and why not? They’re great!

But, have you possibly missed some of the greats along the years? TechSpot might have you covered, with an article that takes a look at twenty-one FPS games “you shouldn’t have missed”. The best part is, this is a really solid list, and although you’ve likely played many of these, the author didn’t skip out on some of the lesser-appreciated titles that were very cool in their own way, like Shogo, for example.

The list includes many influential titles that really shouldn’t be missed if you take PC gaming seriously. Aside from the obvious Doom and Quake, Duke Nukem 3D and System Shock are also mentioned. I recently downloaded and played straight through DN3D on the Xbox Live Arcade, and I can honestly say, that game was just as fun now, as it was back in 1996. But I digress. If you are feeling a bit nostalgic and want to kick back with a classic title rather than the latest hit for a few nights, check the list out.

During the late 1990s it could be said that there were maybe just a few too many shooters hitting the scene and after a strong year in ’99 for action/multiplayer shooters, 2000 came with a dry spell as far as blockbusters were concerned. The Operative: No One Lives Forever (NOLF) gave the genre a much needed breathe of life. The game was presented with an Austin Powers meets James Bond feel, that provided an amusing dialogue and story.

Intel Closes Down Some Facilities, Affects Up to 6,000 Employees

There haven’t been too many companies unaffected by the state of the economy, and we can now add Intel to the list of those that have been hit. In a release issued yesterday, the company announced plans to consolidate manufacturing operations, which includes closing some factories, all while leaving the deployment of 45nm and 32nm parts alone.

Two assembly test facilities to be shut down include those in Penang, Malaysia, and also Cavite, Philippines. Fab 20, an older 200mm wafer fabrication facility in Hillsboro, Oregon, will not be shut down, but will have production halted. In addition, it looks as though production will end entirely at D2, a fab located in the same city as Intel’s HQ, Santa Clara, California.

These major changes are expected to affect between 5,000 and 6,000 employees worldwide, but Intel is quick to state that not all of those will leave Intel entirely. Rather, they may be offered positions in other facilities. There’s surely not going to be 6,000 positions open up elsewhere, however, so many employees are no doubt going to have to battle with finding a job in this tight economy. That cannot be a fun experience, so we wish them luck.

The actions at the four sites, when combined with associated support functions, are expected to affect between 5,000 and 6,000 employees worldwide. However, not all employees will leave Intel; some may be offered positions at other facilities. The actions will take place between now and the end of 2009.

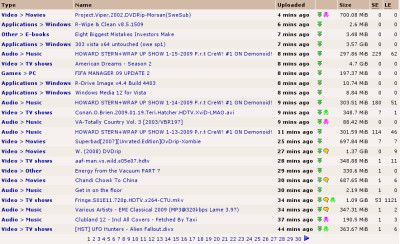

Judge: 10,000 Illegal Downloads != 10,000 Lost Sales

When it comes to technology, I don’t often agree with many judges, but this one I do. Judge James P. Jones gave his opinion in a recent P2P-related lawsuit, and came to the simple conclusion that a downloaded file doesn’t necessarily equate to a lost sale, which is a mindset I’ve had for quite a while. The reasons are simple. I’ve dealt with enough “pirates” through the years, and most of these people are those who wouldn’t pay for a game if it was a single dollar. It’s a mindset issue… free is free.

I’m not at all condoning that this is the right mindset to have, because I feel that if you want something, you should pay for it. But this is reality. The hardware side of things is a little more safe, because it’s very difficult to pirate a piece of hardware, and when it comes down to it, why would you want to? Media is unbelievably easy to pirate, so people take advantage of it.

To me, it’s a simple theory. There’s no way that if 10,000 people download a file, they would all have paid for it if it was unavailable online. Given the fact that it’s very easy to pirate anything nowadays, people download things they don’t even care for, just because they can. It’s like going to the supermarket and picking up fruitcake just because it’s on sale. It’s obviously never going to be eaten, but the allure of it sitting there for such a good price is too good to pass up.

This has turned into a rant post, but there is a lot more to the story than what I have to say, so check out the article below and get a bit more information and all the stats you need to come to your own conclusions.

“Those who download movies and music for free would not necessarily purchase those movies and music at the full purchase price,” Jones wrote. “[A]lthough it is true that someone who copies a digital version of a sound recording has little incentive to purchase the recording through legitimate means, it does not necessarily follow that the downloader would have made a legitimate purchase if the recording had not been available for free.”

Intel Drops Prices on Core 2 Quads, Introduces Low-Power Variants

Price cuts on popular Intel processors have been rumored for a while, but it was all made official on Sunday, with the updated prices being reflected in their processor pricing list. The five current Core 2 Quad models have been affected, with three new low-power versions being quietly introduced. You’ll be able to spot one of these models by the ‘s’ added to the end of their name.

The biggest drop was seen on the 3.0GHz Q9650, which went from $530 to $316. The Q9550 is right behind that model, now sitting at $266. Moving downwards toward models that feature less cache, the Q9400 now sits at $213, while the Q8200 saw a small drop to $163. The Q9300, for whatever reason, didn’t see a price drop at all, and now sits above the faster Q9400 where pricing is concerned.

| Model | Clock | Cache | TDP | Price (per 1,000 units) |
| --- | --- | --- | --- | --- |
| Core 2 Quad Q9650 | 3.00GHz | 12MB | 95W | $316 |
| Core 2 Quad Q9550s | 2.83GHz | 12MB | 65W | $369 |
| Core 2 Quad Q9550 | 2.83GHz | 12MB | 95W | $266 |
| Core 2 Quad Q9400s | 2.66GHz | 6MB | 65W | $320 |
| Core 2 Quad Q9400 | 2.66GHz | 6MB | 95W | $213 |
| Core 2 Quad Q9300 | 2.50GHz | 6MB | 95W | $266 |
| Core 2 Quad Q8300 | 2.50GHz | 4MB | 95W | $183 |
| Core 2 Quad Q8200s | 2.33GHz | 4MB | 65W | $245 |
| Core 2 Quad Q8200 | 2.33GHz | 4MB | 95W | $163 |

The three new lower-power models include the 2.83GHz Q9550s, the 2.66GHz Q9400s and also the 2.33GHz Q8200s. Each of these includes a reduced TDP of 65W (from 95W), and carries a noticeable price premium. The Q9550s, for example, costs $103 more than the non-s version, while the Q8200s costs $82 more than the original.
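For anyone who wants to check the premiums themselves, here is a minimal sketch that works them out from the list prices in the table above. The dictionary and the function name are purely illustrative, and the percentages are my own arithmetic rather than anything published by Intel.

```python
# List prices (USD per 1,000 units) copied from the table above.
PRICES = {
    "Q9550": 266, "Q9550s": 369,
    "Q9400": 213, "Q9400s": 320,
    "Q8200": 163, "Q8200s": 245,
}


def low_power_premium(model: str) -> tuple[int, float]:
    """Return (dollar premium, percent premium) of the 's' variant over the standard part."""
    base = PRICES[model]
    low_power = PRICES[model + "s"]
    diff = low_power - base
    return diff, round(100 * diff / base, 1)


for model in ("Q9550", "Q9400", "Q8200"):
    dollars, percent = low_power_premium(model)
    print(f"{model}s: +${dollars} ({percent}% over the {model})")
```

In relative terms the premium is steepest on the cheaper parts: the Q9400s and Q8200s each cost about half again as much as their 95W counterparts.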

Those are not small differences, so these models will appeal to a very specific crowd. The power-savings will be nice, but I’m not sure how that will negate the much-higher cost-of-entry for some people. One potential benefit is better overclocking-ability, and of course, lower temperatures. These two factors are something we’ll be taking a look at in the coming week or two, so stay tuned.

Mirror’s Edge Offers Unique Gaming Experience

We’ve talked a little bit about Mirror’s Edge in our news in the past, but it wasn’t until the other night that I was finally able to sit down and see what the game was all about. Well, actually, it was more like sitting down and finding a level we could use for benchmarking, because that was the ultimate goal. But I did manage to see what the game was all about.

Mirror’s Edge, for the unaware, is a first-person adventure game that might look like a shooter, but isn’t. In fact, this game seems to have a much higher focus on puzzle-solving, or simply finding your way through a level, than getting rid of people who get in your way. The gameplay is based around parkour, a cool sport that really tests one’s own abilities. The difference in Mirror’s Edge, though, is that you can practice it from the safety of your own home!

I wasn’t sure what to think when jumping (no pun) into the game for the first time, but I have to say, I’m actually quite impressed. First, the game is quite fluid. Before you know it, you’ll be hopping over fences, running up walls, jumping from pipe to pipe, like it’s nothing. That might sound a little bit boring, but it’s surprisingly a great deal of fun. In this regard, the game is a lot more “free” than other first person games.

Picture, for example, seeing a tall building in Half-Life 2 and knowing you can’t get up there without the noclip cheat code. In Mirror’s Edge, there’s a good chance that you can get on most buildings. You just need to know how to properly scale them, and that’s half of the fun. Finding yourself on top of a building two minutes after being on the road is simply cool, and the style of gameplay is definitely refreshing.

When all is said and done, I’ve only played the game through bits and pieces of a few different levels, but so far I’m enjoying it enough to want to dedicate time sometime soon to actually play through it. From what I read around the web, it can be quite frustrating at some points, so I’m sure that’s something that would bug me as well, but the gameplay is just too unique to ignore. If I do manage to dedicate time soon to playing it, I’ll post more about it. I’d also like to take a look at things from a PhysX perspective, since this is the game NVIDIA is pushing so hard around that technology.

OLED is All Over, Even on OCZ Keyboards

“OLED” has been a term thrown around quite a bit in the past year or two, partly in thanks to the Optimus Maximus, but as time passes on, prices for the very cool technology continue to plummet, so it’s a sure thing that we’ll be seeing more affordable uses for it in the near-future. While the Optimus Maximus might have been the first keyboard to utilize OLED to a great extent, the end-cost was well over $1,000. OCZ is taking a more modest approach, as you can see below.

Rather than coat the entire surface with OLED-based keys, they’re sticking to a simpler solution. Nine keys in total can be found on the left-side of this keyboard, which I can’t recall the name of, and each can be programmed to your liking. I’m uncertain of whether or not the keys will remain monochrome as seen in the images, but chances are they will, as I’m pretty sure the company would have shown off the capability.

Despite the lack of color though, OLED keys should be a feature appreciated by many. Having the ability to swap an image on a dime for something else is great. It’s much more difficult to do that with a normal key. Although the software itself wasn’t on display, we can assume that you’d be able to create your own images and functions, since that’s half the point. The pictures don’t do this keyboard justice though… the keys looked much better in person.

Although Hypersonic (an OCZ subsidiary PC boutique company) has a logo on this particular keyboard, OCZ mentioned that the retail product would have only their branding. This does suggest that Hypersonic’s PCs will include the option for this keyboard, though. In addition to the cool OLED keys, the keyboard will also feature 128MB of on-board flash memory, which may prove useful if you want to haul your keyboard over to a friend’s house. That may not happen often, but given the higher price of the keyboard, any additional features like that are welcomed.

Indie 103.1 FM Goes Online Only to Retain Station’s Spirit

Ugh, what’s happening to radio? There’s been a lot of doubt hovering around radio broadcasts for a while, but the truth is, they seem to be just as alive as they ever were, even with the advent of the Internet and streaming services. Many people love driving to work with the radio playing, and others refuse to dial-in to the Internet to listen to a “fake” radio station. Well, it seems one of the best radio stations out there is changing things up, and it doesn’t look good on radio as a whole.

Indie 103.1 FM, a popular Los Angeles radio station, has decided that they’ve had enough with recent changes in the radio industry, and how stations are measured. They’re tired of feeling the pressure to play “too much Britney, Puffy and alternative music that is neither new nor cutting edge”. Amen to that. Indie 103 is one of the few radio stations I listen to on a regular basis (via streaming, I live nowhere near LA), simply because of that. They play music you wouldn’t hear on mainstream radio, and that’s why I, and many others, found ourselves attracted to it.

The station doesn’t care about how large an artist is, or what part of the world they’re from… it just has to be good music. They’ll play mainstream music as well, but again, it has to be good, and I respect that thinking. Luckily for fans though, they’re not going away completely, but have rather shifted their broadcast into an online-only format. Hopefully this will be temporary, and the industry can improve enough to revert the stance, but that’s unlikely. This is the music industry we’re talking about.

Indie 103.1 will cease broadcasting over this frequency effective immediately. Because of changes in the radio industry and the way radio audiences are measured, stations in this market are being forced to play too much Britney, Puffy and alternative music that is neither new nor cutting edge. Due to these challenges, Indie 103.1 was recently faced with only one option – to play the corporate radio game.

Gigabyte’s MA79GX-DS4H Helps Phenom II Reach 6.8GHz

Yesterday, I posted about a new high-end motherboard that eVGA were showing off, but aside from them, it was only Gigabyte who was actually pushing the kind of hardware that we were personally looking for. Motherboards, and lots of them. In particular, they had a keen interest in showing off their latest AMD-based boards, to coincide with the launch of Phenom II.

To help prove how much gusto their boards have, they and a few other co-sponsors held an overclocking event during CES to showcase their overclocking-ability. On-hand were members of the Xtremesystems.org forum, including site-owner Fugger. Their goal was to push the Phenom II X4 940 as far as humanly possible, with their ultimate goal being 7.0GHz.

In the end, they were unable to hit that clock, but they still hit an unbelievable 6.8GHz, which for a 3.0GHz processor works out to roughly 227% of the stock clock, or a 127% overclock. Just how stable was it? Well, you can pretty much guess that one, but it was stable enough to pass through a few benchmarks with relative ease. Although Intel CPUs were also being overclocked, all the focus was on AMD’s latest product.
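An overclock percentage can be quoted two ways, either as the gain over the stock clock or as the final clock relative to stock, and the two are easy to mix up. Here is a minimal sketch of both, using the clocks mentioned above; the function names are just illustrative.

```python
def overclock_gain_percent(stock_ghz: float, achieved_ghz: float) -> float:
    """Percentage increase over the stock clock."""
    return round(100 * (achieved_ghz - stock_ghz) / stock_ghz, 1)


def percent_of_stock(stock_ghz: float, achieved_ghz: float) -> float:
    """Achieved clock expressed as a percentage of the stock clock."""
    return round(100 * achieved_ghz / stock_ghz, 1)


STOCK_GHZ = 3.0  # Phenom II X4 940 stock clock

for label, clock in (("Achieved 6.8GHz", 6.8), ("Target 7.0GHz", 7.0)):
    gain = overclock_gain_percent(STOCK_GHZ, clock)
    ratio = percent_of_stock(STOCK_GHZ, clock)
    print(f"{label}: +{gain}% over stock ({ratio}% of stock)")
```

By the same math, the 7.0GHz target would have been a gain of about 133% over stock.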

To help with those overclocks, the board used was Gigabyte’s MA79GX-DS4H, but the company also took advantage of releasing the successor at the show, the MA790GP-DS4H. We have just received the latter, and I look forward to taking a hard look at it with our Phenom II X4 940. Given I don’t have liquid nitrogen hanging around the lab, I don’t expect any 6.8GHz overclocks, but anywhere in the 4.0GHz region would make me more than happy.

Aside from the overclocking event, Gigabyte was showing off even more motherboards in their Venetian suite, including two new X58 motherboards that cater to those who want Core i7, but want to spend as little as possible. Both the EX58-UD4P and EX58-UD3R boards are going to be priced at around $200, which is much lower than most of the competition. The closest competitor is MSI with their X58 Platinum, which is a board we’ll actually be taking a look at shortly.

Despite carrying its “budget” price ($200 is still not cheap, but it’s a lot better than most), the new boards both feature Gigabyte’s Ultra Durable 3 features, and from the looks of things, they’re not lacking much. The UD4P board features a 12+2+2 power phase solution, on-board TPM and many other features, while the UD3R will be scaled down a little bit. It will lack the capability for SLI, and chances are it will retail for a bit less than the UD4P board. We’re looking to get one of these boards in soon, so stay tuned for our in-depth analysis.

eVGA’s Upcoming X58 Board Promises to Scream “High-End”

One cool surprise at CES that I didn’t expect to see was eVGA’s upcoming high-end X58 motherboard, one that might do well to compete with the likes of ASUS, Gigabyte and others in that arena. Although I don’t recall the name of this board (there might not have been one), it’s designed for hardcore gamers, enthusiasts and overclockers, plain and simple.

The first thing you might notice is the superb color scheme. It doesn’t feature a hundred different colors, but two… black and red. Small aesthetic touches have been taken as well, such as black metal being used where able. Apparently, it’s really complicated to use black metal for the back I/O ports, so sadly, they remain stock.

This board was built for high-end gamers, and the layout of the PCI-E slots proves it. With this configuration, someone would be able to go with tri-SLI, with say, NVIDIA’s new GTX 285s, and then still have a PCI-E slot free for use with a smaller GPU, such as an 8800 GT, for dedicated PhysX use. This is a great ability, but it goes without saying that you’ll want some superb airflow in your chassis.

Other features include a very robust heatsink on both the northbridge and PWM area, eight-phase power solution, included high-end audio (X-Fi, but unsure if it includes the real chip), two SLI bridges (depending on what configuration you are going with), and also an external piece of PCB that contains power buttons and other various switches, including a BIOS status LED. This doesn’t have to be used with a simple GPU solution, but if you plan on topping the board out, like it suggests, this add-on will assure you’ll lose none of the functionality at the bottom of the board.

This board is set to be launched within the next few months and will retail for around $450. It’s pricey, so it won’t be for everyone, but it’s great to see eVGA pushing their motherboard development so hard. They admit that their launch X58 board was a little rushed out the door due to time constraints, but they’ve really spent some hard time with the design of this one. I can’t wait to see what it can muster – besides a very, very warm room, of course.

Steve Jobs Takes Leave of Absence Until End of June

There’s been a rather large cloud of doubt hovering over fans of Apple for quite a while now, relating to the health of the company’s creative CEO, Steve Jobs. Last summer, we found out that Jobs mentioned to a reporter that he was fine, and then just a few weeks ago, he sent out a revealing e-mail to his company and press saying that he has indeed had rough health issues, but the worst was over.

Yesterday, though, Steve e-mailed his team once again and stated that his condition has turned out to be a little more “complex” than originally believed, and to help focus on it, he’ll be taking a leave of absence from the company until the end of June. It goes without saying that this is a smart move, because health should always come before work.

In the interim, Tim Cook, Apple’s COO, will become responsible for the company’s day-to-day operations, although Steve will still be working closely with executives on the important decisions. Apple’s stock fell sharply as a result of the news, but it’s probably obvious that Apple isn’t simply going to die because the CEO’s taking a break. Get well soon, Steve.

I am sure all of you saw my letter last week sharing something very personal with the Apple community. Unfortunately, the curiosity over my personal health continues to be a distraction not only for me and my family, but everyone else at Apple as well. In addition, during the past week I have learned that my health-related issues are more complex than I originally thought.

Content You Can Expect to See Soon

If you happen to be asking yourself why there seems to be a lack of updates on the site over the course of the past week, don’t fret – we have lots of good stuff en route. Each year at CES, we have great intentions to post a fair amount of coverage, but like Vegas itself, CES is sneaky. You think you have a good hold on things, but you don’t. Our time at CES was hogged by the event itself and events related to it, and that resulted in a lack of content, and less than six hours of sleep each night.

That aside though, despite not having as much CES-related coverage during the show as we would have liked, Greg and I have tabulated our results for products that well-deserve our “Best of CES 2009” award. These will be awarded to products that were not just “cool”, but were good enough to remain in our minds after we left the show floor. That article will be posted soon, so stay tuned.

Regarding our coverage on NVIDIA’s latest GPUs, due to certain complications that occurred with our testing prior to my leaving for CES, I was unable to have the article posted in time for their embargo lift. We’re starting fresh on that testing though, and at the same time, we’re retiring our Core 2 machine, which we’ve used for GPU reviews for a while, and moving up to a Core i7 platform, using Intel’s i7-965 Extreme Edition and ASUS’ Rampage II Extreme.

So that said, reviews for both the GeForce GTX 295 and GTX 285 will be posted next week, and soon after that, we’ll have an in-depth article posted taking a hard look at NVIDIA’s GeForce 3D Vision, which we previewed last week. The kit’s been here for a few weeks, and I’ve been dying to tear it open. After taking a look at the demos at CES, I think I might very well have good reason for the excitement, and the same goes for you guys.

Stay tuned, we’ll have lots of updates coming up soon, now that the post-CES syndrome is calming down a bit!

IRS Urged To Levy Taxes On Economies of Virtual Worlds

One aspect of real-world societies that translates extremely well into the virtual world is economics, with many MMOs boasting thriving economies where players buy, sell, barter, and trade with one another, in a currency with a specific denomination and uniform value.

Ars Technica reports that with games like World of Warcraft, Second Life, and even Ultima Online showing continued strong popularity among PC gamers, the IRS has begun to look at the feasibility of taxing in-game transactions between players, consisting of weapons, trinkets, character adornments/costumes and other items of various in-game usefulness (which, in the case of Second Life, includes sex toys). According to a report published by Taxpayer Advocate Nina Olson on the IRS’ web site, virtual online economies are an “emerging area of noncompliance” that the IRS should “proactively address”. Tax policy currently covers the exchange of real (the operative word here) money for the virtual currency of a particular in-game world, but Olson’s report cites the burden of tracking and reconstructing a paper trail for these transactions as the primary difficulty in “help[ing] taxpayers comply with their tax obligations”.

The collective response from a number of commentators is, “Oh, is that the best you can do?” And indeed, we can think of a number of other ‘difficulties’ the IRS may run into, such as what to do with all that virtual money, which is valueless unless converted back into a real-world currency. Perhaps the U.S. government plans to spend the additional virtual revenue on funding its cyber-counterterrorism efforts? And what about the small matter of ‘taxation without representation’: will Mulgore finally get a seat in the U.S. Senate? There are much less far-fetched ways for the IRS to benefit from the growth of in-game economies than actually attempting to tax in-game trade, prizes, and winnings, such as simply taxing the conversion of real-world currency to game currency, though even this seems tantamount to taxing the tokens won at Chuck E. Cheese (which can also be exchanged for ‘goods’, mind you).

This isn’t the first time the concept of taxing virtual worlds has come up. Since at least 2003, people both on- and offline have been looking at the tax implications of virtual economies, and Dan Miller, senior economist for the congressional Joint Economic Committee, started entertaining the idea of taxing MMORPGs in 2006 after diving into the world of online gaming himself.

As Olivia Newton-John Sang, “Let’s Get Physical”

In a time when nearly everyone is moving to a means of digital distribution, SanDisk is returning to the idea that selling music in bulk, in a physical form, is the way to go. The songs will be taken from Billboard lists, and currently number around one thousand tracks, but I wouldn’t expect the playlist to remain so small for very long, since the memory manufacturer is also revealing their new 16GB Mobile Ultra MicroSDHC card this month.

And to play this preloaded music? SanDisk would suggest the Sansa slotMusic player that was announced last fall and left everyone speechless, but in a bad way. This little music player was most likely announced with the full knowledge that SanDisk would announce preloaded music cards at this year’s CES, but the timing of the announcements still seems quite odd. If you’re in the market for an MP3 player now, and you aren’t completely sold on the idea of these preloaded music cards for $39.99, but you feel you might want to give them a try later, you might want to consider a Sansa Fuze, which is a currently-available player that is capable of playing these preloaded cards.

Oh, and lest we forget: http://www.youtube.com/watch?v=VQXECBdPgEA

LAS VEGAS—When SanDisk announced its new Sansa slotMusic player last fall, the reaction from the media was lukewarm – why was SanDisk introducing a physical format-based player in the digital file era? A move to microSD cards preloaded with music seems to make more sense…

ASUS Pushes for Multi-Touch and Ultimate Wireless Capabilities

Greg and I are sitting in on an ASUS event where the company is showing off many cool products. Multi-touch technology is something that’s being pushed hard, and we’re going to be seeing ASUS implement it across a wide variety of products. Eee PCs will feature it, and so will some of ASUS’ regular notebooks. On the M50, for example, there isn’t a typical touch-pad, but rather a touch-screen that doubles for both tasks.

Then we have an Eee-based keyboard media center PC, that includes a full computer inside of a regular-sized keyboard. You read that correctly… a full-blown PC, inside of a freaking keyboard. It will connect to a display via Ultra Wideband HDMI, and the keyboard itself will feature a 5″ touchscreen/touchpad, a microphone and speaker and also a LAN port. This one will be very interesting to see hit the market.

Pushing the multi-touch scheme even further, ASUS will also unveil an Eee PC-based tablet PC. It will convert from a regular notebook to a tablet, and comes in an 8.9″ form-factor. It will be great for those work-hounds on the go. Hopefully we’ll see some decent battery-life out of this one.

We also learned that ASUS is working closely with Microsoft to make sure Windows 7 works well on all mobile PCs, especially the company’s own Eee PC. A demo on stage showed the OS running on a netbook with 1GB of RAM, and it ran well for the most part. Great things are sure to come from this partnership.

That’s all we can report on for now, but I had to get this out there since some of their announcements are pretty amazing. Please refer to our ongoing CES forum thread where we will post updates as we’re able.

Breaking: RIAA Officially Kicks MediaSentry To The Curb

We last reported on MediaSentry, the firm hired by the RIAA to snoop around various P2P networks for potential lawsuit targets, when they accidentally targeted the distribution network of Revision3, a legitimate content provider, causing a disruption in Revision3’s service. Recently, however, rumors began to circulate that the RIAA (Recording Industry Association of America) was severing ties with MediaSentry (a division of SafeNet Inc.) for reasons unknown.

According to a new story published less than two hours ago by the Wall Street Journal, those rumors had a basis in fact. The RIAA does indeed plan to stop working with MediaSentry to identify ripe lawsuit targets. However, this doesn’t mean that the RIAA is abandoning the practice of monitoring P2P networks. Instead, the industry group plans to work with the Danish firm DtecNet Software ApS, which claims to have a more advanced means of determining whether a person shared a particular song. MediaSentry’s tactics were only capable of determining that defendants had shared the music file with its own investigators, with no proof that the song had been shared indiscriminately, a fact that had caused the RIAA some trouble in court earlier.

Mr. Beckerman cites MediaSentry’s practice of looking for available songs in people’s file-sharing folders, downloading them, and using those downloads in court as evidence of copyright violations. He says MediaSentry couldn’t prove defendants had shared their files with anyone other than MediaSentry investigators.

Rumor: Microsoft Prepares To Slash 15,000 From Workforce

Call it a sign of the times, or call it something bigger – according to DailyTech, rumors have begun circulating that suggest Microsoft plans to cut 15,000 employees in the month of January, in a massive cost-cutting move that targets underperforming segments of their business. At present, the cuts remain the stuff of rumor, but for some Microsoft employees, it’s like finding the memo in the ‘out’ tray of the office copier.

At least Microsoft was charitable enough to wait until the first normal business week of January, after the holidays were over, to make any job cuts that may be announced as part of a potential restructuring plan. It’s suspected that any restructuring that occurs may focus on the company’s MSN (Microsoft Network) division, which manages its network of MSN-branded portal sites, such as MSN Money and MSN Autos.

Whether Microsoft is simply feeling the pinch of the current recessionary American economy or something larger is to blame, many believe that Microsoft will need to be more focused on doing what it does best, instead of simply trying to own everything in sight that looks profitable. Yet, according to the DailyTech article, analysts don’t predict that Microsoft will simply ‘shut down’ divisions as part of a restructuring plan; they expect it would prefer to sell them instead.

Microsoft is looking hard at areas where it can make money or save money. Making money is the reason that Microsoft keeps postponing the retirement of Windows XP. XP is huge in the netbook market thanks to the fact that most netbooks won’t run Vista and Linux isn’t appealing to many customers.

Richard Stallman, Free Software, and 25 Years

In an article on TechRadar, GNU founder and SourceForge supporter Richard Stallman shares his views on the now 25-year-old GNU project and its mission to support and develop free software for all, his criteria for what he considers free software, and why TiVo sucks. (And that barely gets the article going.)

What is surprising to me is that the bulk of what we consider today to be free still comes with some proprietary software packaged in, courtesy of NVIDIA and the like, for hardware and other needs. Those proprietary bits of code come with licenses, and handling the legal aspects alone while developing open source software can be difficult. Can Linux distros ever really compete and become popular in a market stacked against them? If it is up to Richard Stallman and people like Stephen Fry, maybe.

Projects like OpenOffice are always helping the cause for free software, but the recent release of 3.0 was not as groundbreaking as the release of Firefox 3.0. And while Android is carving a niche for itself in the cellphone world, there are still some people completely clueless about this “open source thing” that is finally starting to stumble awkwardly into the spotlight.

It is a bit old, but you can view a video of Stephen Fry discussing the GNU project’s 25th anniversary here. (http://www.gnu.org/fry/)

25 years after Stallman first set the GNU project in motion, what have these ideals achieved, and what can we do to ensure the future of free software? Linux Format spoke to him to find out.

While Linus Torvalds gets most of the plaudits nowadays for the Linux kernel, it was Stallman who originally posted plans for a new, and free, operating system. Free had nothing to do with the cost of the operating system, but with the implicit rights of those who were using the software to do with it exactly as they pleased.

China Busts Counterfeit Ring, Convicts 11

It goes without saying that piracy of any sort is a huge issue, from movies to music to software, and sometimes, even to hardware. In the US and Canada though, we are well aware of what can happen to software pirates, as through the years, many have been carted off to prison for an uncomfortable sentence, even if they weren’t selling the software, but only making it available.

One common conception though, is that China couldn’t care less about the piracy issue in their country, but stories leaking out now contradict those beliefs. Just last week, a Shenzhen court handed out 11 penalties to people tied to a sophisticated counterfeit ring that’s existed for years. They mass-produced Microsoft software, specifically it seems, and distributed it around the world – not just China.

The sentences are somewhat modest, ranging from 18 months to 6 years, but the move should take a nice chunk out of piracy in China, and maybe other parts of the world… at least for a little while. Microsoft is quoted as being very pleased with the sentences, and they should be, since supposedly, they are the stiffest ones ever handed out.

The counterfeit products produced were of such high quality that they were difficult to tell from authentic software. The counterfeit goods were packaged in similar packaging and even had counterfeit Microsoft authenticity certificates. The counterfeit ring produces software like Windows XP and Office 2007 and was broken up in July of 2007 according to The New York Times.

Windows 7 to Bundle Popular Video Codecs

Given I’m not a Macintosh user, I’m unsure of the situation on that platform, but I think most people understand well how things go down with a fresh Windows installation. Once the OS is installed, the quest for normality is far from over. You’ll need to go online and download a variety of patches, updated drivers, and if you plan to watch videos, a robust player and some codecs. With Windows 7, Microsoft hopes to take some of the hassle out of the video issue by including a few of the most common codecs.

Included will be DivX support, which is great given so much of the web offers downloadable videos in that format. In addition, H.264 and AAC audio will also be supported, meaning most high-definition downloadable video will be good to go right away. With these additions, will we also see Adobe Flash built-in? Not surprisingly, no. Bundling that would cause a conflict of interest, given Microsoft develops a competing technology (Silverlight).

These additions do a good job of making Windows 7 look even better though. When Vista came to launch, the vast majority of people were skeptical, and not really too excited. Windows 7 is a stark contrast, with clear excitement being seen all over the Internet. As we did with the Vista launch, we’ll be sure to cover 7 as we get a little bit closer to launch, taking a look at things from both a performance and usability perspective.

The ability to play back these additional formats has implications for new Windows 7 services like libraries and networked media player support, as Windows 7 users can index and search across their iTunes media without needing to use iTunes as the default player, and can send a wider variety of media content to a centralized location.

Zunes Attempt to Shun 2009

Some people spend the last day of the year differently than others. Some might prepare their resolutions, while others treat it like any other day. If you are a Zune user, you might very well not be listening to your music today. Although the reason for the mass suicide isn’t verified by Microsoft, some feel that it has to do with this year being a leap year, and in some small way, that makes sense.

Or does it? The reports are that some Zunes are simply dying off at around 2:00AM today, which, if that’s EST, would be midnight PST. The good thing is that all of the Zunes should begin working tomorrow (we can hope), but I am pretty interested in the real reason this is happening. If a Zune can die off simply because of a date issue, that doesn’t give me much reason to believe in the stability of the product.

If it is indeed a date issue, Microsoft will likely issue a firmware update for the device, but that might not matter to many people. By the time the next leap year rolls around, we’ll likely be toting around 3TB Zunes (don’t quote me, my assumptions have been known to be absurd).
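To make the leap-year theory concrete, here is a minimal Python sketch of how a routine that converts a running day count into a year can stall on the 366th day of a leap year. Nothing here is taken from Microsoft or from the Zune’s firmware: the function names, the 1980 starting year, and the iteration guard are all assumptions made purely for illustration.

```python
from datetime import date


def is_leap_year(year: int) -> bool:
    """Standard Gregorian leap-year rule."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def year_from_days_buggy(days, start_year=1980, max_iterations=10_000):
    """Convert a day count (1 = Jan 1 of start_year) to (year, day_of_year).

    Hypothetical buggy version. Returns None if the loop stops making
    progress; real firmware without the guard would simply hang.
    """
    year = start_year
    for _ in range(max_iterations):
        if days <= 365:
            return year, days
        if is_leap_year(year):
            if days > 366:
                days -= 366
                year += 1
            # BUG: when days == 366 in a leap year, nothing changes,
            # so the loop spins forever on December 31.
        else:
            days -= 365
            year += 1
    return None


def year_from_days_fixed(days, start_year=1980):
    """Same conversion, but always compares against the actual year length."""
    year = start_year
    while True:
        length = 366 if is_leap_year(year) else 365
        if days <= length:
            return year, days
        days -= length
        year += 1


# Day count for December 31, 2008, measured from the assumed 1980 start.
DEC_31_2008 = (date(2008, 12, 31) - date(1980, 1, 1)).days + 1

print(year_from_days_buggy(DEC_31_2008))      # None: the loop stalled
print(year_from_days_fixed(DEC_31_2008))      # (2008, 366)
print(year_from_days_buggy(DEC_31_2008 + 1))  # (2009, 1): fine the next day
```

A bug of this shape would line up with the reported symptoms, since only the last day of a leap year triggers it and the device recovers on its own once the date rolls over.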

Update: Whoops, it seems my math was a little (lot) off. 2:00AM EST would be 11:00PM PST, not midnight. Thanks to Johnny for the correction.
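For anyone who wants to double-check the corrected math, here is a quick Python sketch. It assumes fixed standard-time offsets (EST as UTC-5, PST as UTC-8), which is fine for late December; the helper name is made up for this example.

```python
from datetime import datetime, timedelta, timezone

# Fixed standard-time offsets; no daylight saving in late December.
EST = timezone(timedelta(hours=-5), "EST")
PST = timezone(timedelta(hours=-8), "PST")


def est_to_pst(moment_est: datetime) -> datetime:
    """Re-express an EST timestamp as the same instant in PST."""
    return moment_est.astimezone(PST)


# The reported failure time: about 2:00AM EST on December 31, 2008.
failure_est = datetime(2008, 12, 31, 2, 0, tzinfo=EST)
failure_pst = est_to_pst(failure_est)

print(failure_pst.strftime("%Y-%m-%d %I:%M %p %Z"))  # 2008-12-30 11:00 PM PST
```

PST trails EST by three hours, so 2:00AM EST lands at 11:00PM PST on the previous calendar day.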

According to reports this isn’t a few 30GB Zunes that have failed, the vast majority of the devices have the same exact failure symptoms and have been reported by hundreds of owners according to Gizmodo. At this point little is known as to what is causing the mass failures of the devices. Once Microsoft is up and running for the workday, perhaps we will get more information on the issue.