Tracing The Groundwork Of NVIDIA’s Turing Architecture

With the release of NVIDIA’s Turing-based GeForce RTX cards imminent, we get a chance to look over the underlying architecture that makes up the most complex system we’ve ever seen. With so many new technologies from different fields interwoven, Turing has a lot riding on it, and it makes us ask: is this the start of real-time ray tracing?

With the official announcement back at SIGGRAPH for the Quadro RTX lineup, and then a couple of weeks later at Gamescom for the GeForce RTX, NVIDIA’s Turing architecture is shaping up to be quite a large departure from the releases we’ve seen in the past.

Turing is the encapsulation of more than a decade of research, and brings together technologies from a wide range of fields.

While the RTX branding on the cards signifies the coming of real-time ray tracing, there are a lot of underlying technologies at work in this release, and quite a few of them had nothing to do with ray tracing, at least to begin with.

The Turing architecture is quite a complex beast, vastly more so than anything that’s come before it. It’s not just breaking new ground in trying to deliver real-time ray tracing in games, but also pushing supporting technologies from the field of deep learning. It builds on the foundations of CUDA, which has been in continuous development for over 10 years, through every architecture up to and including Pascal.

Turing brings over the Tensor Cores that debuted with Volta, as well as asynchronous (concurrent) execution of integer and floating point calculations. There has been an explosion in AI research driven by deep learning, building neural network models that perform specialized functions extremely quickly, and those models can be run (inferenced) on either the CUDA or Tensor Cores. And finally, Turing adds dedicated RT Cores for ray tracing, which give the RTX branding its name.

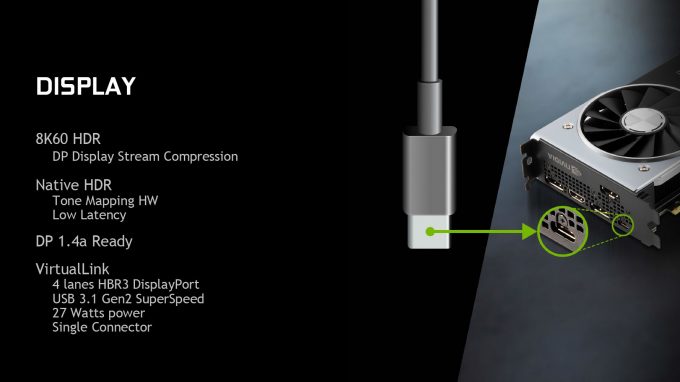

The Turing platform also extends beyond the GPU itself: these are the first discrete graphics cards to use GDDR6 memory. There is also a push for a new universal display connector in the form of VirtualLink, which combines USB data transfer, DisplayPort video, and power delivery in a single USB Type-C connector.

The SLI multi-GPU interface will also be replaced with the much faster NVLink connector, borrowed from the Quadro line. Oh, and the rather inefficient blower-style cooler has been ditched in favor of a dual axial fan design, at least on the GeForce cards.

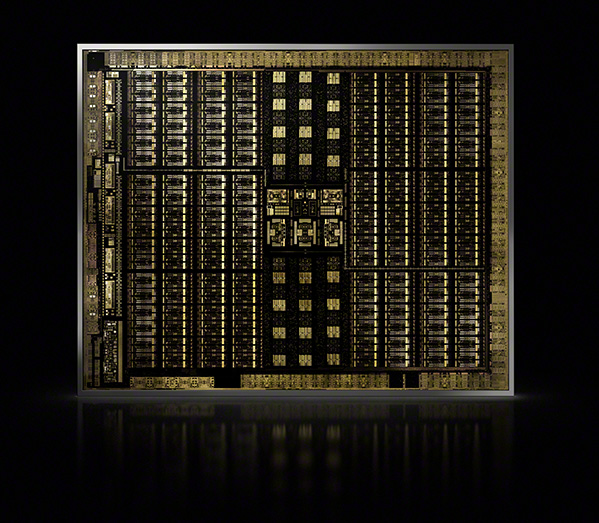

Before covering the specifics of each chip in the generation, such as TU102 and TU104, at the heart of the RTX 2080 Ti and RTX 2080 respectively, we’re going to concentrate on the foundations that go into every chip.

Asynchronous Compute

A big boon to a number of games, though less so to others, is a restructuring of how Turing handles asynchronous compute. NVIDIA has had issues with mixed workloads since long before Pascal: it was a sore point going back to Kepler, and was only somewhat addressed with Maxwell.

When having to switch between graphics processing and compute, such as with physics calculations or various shaders, the GPU would have to switch modes, and this incurred quite a substantial performance loss due to the latency in the switch. Pascal made this switch almost seamless, but it was still there.

Turing, on the other hand, finally allows concurrent execution of integer and floating point math, using separate integer and floating point datapaths. Better still, they now share the same cache. On paper, NVIDIA says this results in a 50% boost in performance per CUDA core in mixed workloads. How this translates into real-world performance will vary considerably, but we expect quite a few games to see tangible improvements from this alone.
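Why the gain varies so much from game to game comes down to how much integer work a shader actually contains. The toy model below is a sketch under loudly simplified assumptions: each pipeline issues one instruction per cycle, there are no dependencies or memory stalls, and the 36:100 integer-to-float mix is hypothetical. It only shows the shape of the effect, not Turing's real scheduler.

```python
# Toy issue model (assumption): each pipeline issues one instruction per
# cycle, with no dependencies, memory stalls, or scheduling limits.

def serial_cycles(fp_ops: int, int_ops: int) -> int:
    """FP and INT work share one datapath, so the two streams queue up."""
    return fp_ops + int_ops


def concurrent_cycles(fp_ops: int, int_ops: int) -> int:
    """Separate FP and INT pipes run side by side, so the longer stream
    sets the pace."""
    return max(fp_ops, int_ops)


def speedup(fp_ops: int, int_ops: int) -> float:
    """Ratio of serial to concurrent cycle counts for a given mix."""
    return serial_cycles(fp_ops, int_ops) / concurrent_cycles(fp_ops, int_ops)


if __name__ == "__main__":
    # Hypothetical shader mix: 36 integer instructions per 100 FP ones.
    print(f"mixed shader: {speedup(100, 36):.2f}x")  # mixed shader: 1.36x
    # A purely floating point shader gains nothing from the extra pipe.
    print(f"FP-only shader: {speedup(100, 0):.2f}x")  # FP-only shader: 1.00x
```

A shader with no integer work sees no benefit at all, which is why some games will gain noticeably and others barely at all.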

Games like Ashes of the Singularity should respond well to the changes, as should other games with mixed workloads, notably those that make use of DX12, but this is something we won’t see the full effect of until later. More generally, a lot of games should see some kind of uptick in performance.

Tensor Cores

Bringing Tensor Cores to Turing is both surprising and expected at the same time. We’ve touched on them in the past with the launch of the Volta-based Titan V, GV100, and Tesla cards. Tensor Cores are extremely fast, low-precision units built for matrix computations (FP16 on Volta, with INT8 and INT4 modes added on Turing), and they are at the heart of the deep learning revolution.

Tensor Cores do quick and dirty calculations, but a great mass of them at the same time, and are used both for training neural networks and for inferencing a result. From a compute point of view they make sense, but games make little use of such low-precision calculations, typically favoring FP32 over the FP16 and INT8 math the Tensor Cores are built for. So why bring them to a gaming/graphics card?
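The basic Tensor Core operation is a small matrix multiply-accumulate, D = A × B + C, on 4×4 tiles, with low-precision (FP16) inputs and a wider accumulator. The scalar emulation below is only an illustration of that arithmetic: Python's `struct` half-precision format stands in for FP16 rounding, and Python floats (FP64 here) stand in for the wider accumulator. Real hardware does the whole tile at once.

```python
import struct
from typing import List

Matrix = List[List[float]]


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision value ('e' format)."""
    return struct.unpack("e", struct.pack("e", x))[0]


def mma_4x4(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """D = A x B + C on 4x4 tiles.

    A and B are rounded to FP16 first (the low-precision inputs); products
    are then summed in Python floats, standing in for the wider accumulator.
    C is assumed to already be in accumulator precision.
    """
    n = 4
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = c[i][j]
            for k in range(n):
                acc += a16[i][k] * b16[k][j]
            d[i][j] = acc
    return d


if __name__ == "__main__":
    a = [[0.1] * 4 for _ in range(4)]  # 0.1 has no exact FP16 form
    b = [[1.0] * 4 for _ in range(4)]
    c = [[0.0] * 4 for _ in range(4)]
    print(mma_4x4(a, b, c)[0][0])  # 0.39990234375 rather than 0.4
```

The small error in the result is the "quick and dirty" part: fine for neural networks, less welcome in a typical FP32 game shader.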

Tensor Cores on Turing are not so much about training as about inferencing, i.e. putting a network to work rather than teaching it how to do something. If you’ve kept up with the professional graphics announcements NVIDIA has made over the last couple of years, you may have heard about denoising in ray tracing. For those unaware, it’s worth reading up on, but effectively it means estimating the value of a pixel in a given scene, rather than calculating it directly. In part, it’s this ‘guesswork’ from the Tensor Cores that allows the RTX cards to do real-time ray tracing.
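NVIDIA's denoiser is a trained neural network, and the sketch below is deliberately not that. It is a plain neighbor-average filter, included only to show the underlying idea of estimating pixels that were never traced from the ones that were. Unsampled pixels are marked `None`, a convention assumed here for illustration.

```python
from typing import List, Optional

Image = List[List[Optional[float]]]


def fill_missing(img: Image) -> List[List[float]]:
    """Estimate each unsampled pixel (None) as the mean of the sampled
    pixels in its 3x3 neighborhood; sampled pixels pass through as-is.
    A pixel with no sampled neighbors falls back to 0.0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            value = img[y][x]
            if value is not None:
                out[y][x] = value
                continue
            samples = [
                img[ny][nx]
                for ny in range(max(0, y - 1), min(h, y + 2))
                for nx in range(max(0, x - 1), min(w, x + 2))
                if img[ny][nx] is not None
            ]
            out[y][x] = sum(samples) / len(samples) if samples else 0.0
    return out


if __name__ == "__main__":
    sparse = [[1.0, None, 3.0]]  # the middle pixel never got a ray
    print(fill_missing(sparse))
```

A learned denoiser makes far better guesses than a blind average, but the job is the same: fill the gaps left by rays that were never cast.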

There are other uses for the Tensor Cores as well, and for games, they center on post-processing effects and upscaling. At SIGGRAPH we saw a number of upscaling demos, in which low-resolution images and movies were upscaled to a much higher resolution with almost no visible artifacts.

In games, this takes the form of Deep Learning Super Sampling (DLSS), something you’ll likely see more of going forward. It acts as a high-quality anti-aliasing mode similar to Temporal Anti-Aliasing (TAA), in that it works from multiple frames and takes scene depth into account when smoothing out jaggies.
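The "multiple frames" part can be illustrated with the core of a classic TAA resolve: blending each new frame into an accumulated history. This is a minimal sketch of that blend only. It is not DLSS, and it omits the motion-vector reprojection, depth checks, and history clamping a real TAA implementation needs. The `alpha` value is an assumption.

```python
from typing import List

Frame = List[float]  # one row of pixel intensities, flattened for brevity


def accumulate(history: Frame, current: Frame, alpha: float = 0.1) -> Frame:
    """Blend the newest frame into the running history (an exponential
    moving average). A small alpha leans on past frames, which smooths
    flickering edges, at the cost of ghosting when things move."""
    if len(history) != len(current):
        raise ValueError("frames must be the same size")
    return [(1.0 - alpha) * h + alpha * c for h, c in zip(history, current)]


if __name__ == "__main__":
    # A pixel on a jagged edge: the jittered sample lands inside the
    # triangle (1.0) on even frames and outside it (0.0) on odd frames.
    history = [0.0]
    for frame in range(200):
        history = accumulate(history, [1.0 if frame % 2 == 0 else 0.0])
    print(history)  # settles near 0.5, i.e. a partially covered edge pixel
```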

The thing with Tensor Cores, though, is that they have many uses in games: as a post-processing engine, for increasing graphical fidelity, or for performing certain tasks faster through highly educated guesswork. There is significant potential there, but most of it will go unseen. We expect to see a great deal of new use cases popping up, such as advanced shading techniques and stylization. This plays into NVIDIA’s NGX (Neural Graphics Framework), which will be used for the aforementioned DLSS, for AI InPainting (a content-aware image replacement system; think Photoshop), and for some oddities like slow-motion playback.

RT Cores

The namesake of the RTX lineup is the all-new RT Core, a purpose-built ray tracing pipeline that plugs directly into the new ray tracing APIs: Microsoft’s DXR, NVIDIA’s own OptiX, and Vulkan’s ray tracing extensions. The RT Cores sit inside the Streaming Multiprocessor (SM) units and, unlike the Tensor Cores, are part of the normal rendering pipeline.

When you read through what the RT Cores actually do, it’s somewhat deceptive, as it appears quite simple. They are dedicated units that accelerate two tasks: Bounding Volume Hierarchy (BVH) traversal, and ray/triangle intersection testing. A BVH is a form of culling for ray tracing: the scene’s geometry is wrapped in a tree of nested bounding boxes, from large boxes enclosing whole groups of objects down to small boxes around individual triangles. A ray is first tested against the largest box; if it hits, it is tested against the boxes inside it, and so on down to the triangles themselves. If a ray misses a box, everything inside that box is skipped, and if it misses everything, that ray is finished and the hardware moves on to the next one. Organizing the scene this way means time isn’t wasted testing rays against geometry they can’t possibly hit.
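In software, BVH traversal boils down to a cheap ray/box test (the "slab" test) applied while walking the tree. The sketch below assumes a hand-built two-leaf hierarchy and skips tree construction entirely; the `Node` layout is hypothetical and says nothing about how the RT Cores store their BVH.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec3  # minimum corner of this node's axis-aligned bounding box
    hi: Vec3  # maximum corner
    children: List["Node"] = field(default_factory=list)
    prim_ids: List[int] = field(default_factory=list)  # leaves only


def ray_hits_box(origin: Vec3, direction: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Slab test: clip the ray against each pair of axis-aligned planes and
    check that the entry/exit intervals still overlap in front of the origin."""
    t_near, t_far = 0.0, math.inf
    for axis in range(3):
        o, d = origin[axis], direction[axis]
        if d == 0.0:
            # Ray runs parallel to this slab: it must start between the planes.
            if o < lo[axis] or o > hi[axis]:
                return False
            continue
        t1 = (lo[axis] - o) / d
        t2 = (hi[axis] - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    return t_near <= t_far


def traverse(root: Node, origin: Vec3, direction: Vec3) -> Tuple[List[int], int]:
    """Walk the hierarchy with an explicit stack. Returns the primitive ids in
    every leaf box the ray enters (candidates for the ray/triangle test) and
    the number of box tests performed."""
    candidates: List[int] = []
    box_tests = 0
    stack = [root]
    while stack:
        node = stack.pop()
        box_tests += 1
        if not ray_hits_box(origin, direction, node.lo, node.hi):
            continue  # a miss prunes this box and everything inside it
        if node.children:
            stack.extend(node.children)
        else:
            candidates.extend(node.prim_ids)
    return candidates, box_tests


if __name__ == "__main__":
    left = Node((0, 0, 0), (4, 10, 10), prim_ids=[0, 1])
    right = Node((6, 0, 0), (10, 10, 10), prim_ids=[2])
    root = Node((0, 0, 0), (10, 10, 10), children=[left, right])
    # A ray travelling along +y at x=2 only passes through the left box.
    print(traverse(root, (2, -1, 5), (0, 1, 0)))  # ([0, 1], 3)
```

With thousands of boxes per scene the payoff is large: a single miss near the top of the tree rules out a whole branch of geometry.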

Basically, the RT Cores figure out which objects a ray hits, then tell the SM units that something is there. This repeats recursively for as many times as the ray is set to ‘bounce’.
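Once traversal has narrowed things down to a few candidate triangles, each one needs an exact ray/triangle test. A common software formulation is the Möller–Trumbore algorithm, sketched below; we're not claiming it is what the RT Cores implement in hardware, only that it performs the same job.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Möller–Trumbore: return the distance t along the ray to the hit,
    or None if the ray misses the triangle (or it lies behind the origin)."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None


if __name__ == "__main__":
    tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(ray_triangle((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), *tri))  # 1.0
```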

Before the RT Cores can kick in, though, you still need geometry data, material specifications, reflectivity, and so on, and all of that is handled by the standard SM units. The RT Cores just work out where the light actually hits based on what’s in view, and tell the SM whether something is there. Tracing out those rays is very time-consuming, as multiple rays may be needed to light a single pixel in a scene, so by passing that work off to dedicated hardware, the SM units are free to carry on working in the background and update the scene as it unfolds.

Despite the dedicated hardware, the RT Cores by themselves are not enough to do full-scene ray tracing in real time; it’s just far too complex at this point, which is why ray tracing on Turing is a two-part process. Real-time ray tracing comes down to time management: keeping a scene simple enough and limiting the time slot it has to work in. Turing uses the RT Cores to rough out a scene, then uses the Tensor Cores with AI denoising to fill in any holes that could not be completed in time.
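A quick back-of-the-envelope calculation shows how tight that time slot is. The sketch assumes the full rated gigaray figure (an NVIDIA peak estimate, e.g. 10 GR/s for the RTX 2080 Ti) is spent on rays alone, ignoring shading, BVH updates, and denoising, so it is an optimistic upper bound.

```python
def rays_per_pixel(gigarays_per_s: float, width: int, height: int, fps: float) -> float:
    """Average rays available per pixel per frame if the whole rated ray
    budget went to this frame's pixels (an optimistic upper bound)."""
    return gigarays_per_s * 1e9 / (width * height * fps)


if __name__ == "__main__":
    for w, h in ((1920, 1080), (2560, 1440), (3840, 2160)):
        budget = rays_per_pixel(10, w, h, 60)
        print(f"{w}x{h} @ 60 FPS: {budget:.1f} rays per pixel")
```

That works out to roughly 80 rays per pixel at 1080p and about 20 at 4K, before a single bounce, shadow ray, or extra sample is accounted for, which is why denoising and selective use of ray tracing matter so much.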

The biggest time saver for ray tracing, though, is simply not using it, and that’s precisely how games will work. New games, even 2-5 years out, won’t have full-scene ray tracing; it’s just not going to happen. The vast majority of each scene will still be rasterized, and ray tracing will only be used where it’s needed or where it does a better job than a raster equivalent. This is why most demos focus on specific use cases, such as surface reflections, soft shadows, and global illumination in a small room. Pretty much everything else will still be rasterized, but developers will now have a choice.

This isn’t to say that ray tracing is pointless, far from it; it’s just complex and resource-hungry, which limits its applications. Movies can use ray tracing because frames are rendered at a more leisurely pace, but games have to produce frames in real time, so compromises have to be made, which is where rasterization comes in.

The whole point of a raster engine is to generate a close approximation to reality with regard to lighting, so that a scene looks realistic enough. However, as scenes become more and more complex, and more hardware is thrown at the problem, it becomes evident that at some point, approximations are not good enough, or are too costly to implement. It’s at that point that ray tracing becomes a valid option.

With the new ray tracing APIs, only certain parts of a scene need to be calculated with ray tracing, while the rest is left to the raster engine.

New Shaders

There are a number of ‘under-the-hood’ enhancements as well. While asynchronous compute holds some big advantages in mixed workloads, there are also ways to improve the flow of data and geometry through the system. Mesh shading replaces the separate vertex, tessellation, and geometry stages with a more flexible model in which task and mesh shaders process small batches of geometry (meshlets) cooperatively, sharing resources between them. NVIDIA lists the main benefit as reducing the number of draw calls the CPU has to issue.
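Mesh shaders work on meshlets: small clusters of triangles whose vertices fit in on-chip memory. The greedy packer below is a minimal sketch of how a mesh might be split up ahead of time. The default limits of 64 unique vertices and 126 triangles per meshlet are assumptions for illustration, and real tools also reorder triangles for vertex reuse and culling.

```python
from typing import List, Set, Tuple

Triangle = Tuple[int, int, int]  # three vertex indices


def build_meshlets(tris: List[Triangle], max_verts: int = 64,
                   max_tris: int = 126) -> List[List[Triangle]]:
    """Greedily pack triangles, in order, into meshlets that never exceed
    the unique-vertex or triangle limits."""
    meshlets: List[List[Triangle]] = []
    current: List[Triangle] = []
    verts: Set[int] = set()
    for tri in tris:
        new_verts = verts.union(tri)
        too_many_verts = len(new_verts) > max_verts
        too_many_tris = len(current) + 1 > max_tris
        if current and (too_many_verts or too_many_tris):
            meshlets.append(current)  # close the full meshlet
            current, new_verts = [], set(tri)
        current.append(tri)
        verts = new_verts
    if current:
        meshlets.append(current)
    return meshlets


if __name__ == "__main__":
    strip = [(i, i + 1, i + 2) for i in range(1000)]  # a long triangle strip
    print(len(build_meshlets(strip)), "meshlets")
```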

Variable Rate Shading (VRS) is, as its name suggests, a system for dynamically adjusting the rate at which objects are shaded (pixels filled in). Objects that are not clearly visible can be shaded at a coarser rate, while objects central to the camera in bright areas can get more shading work to improve quality. By adjusting how much time is spent on different areas of a scene, higher frame rates or better visual quality can be achieved.

The classic example is foveated rendering in virtual reality, where only the area the user is looking at is rendered at full quality, leaving the periphery at lower quality. The problem is that this requires developer intervention to enact, which means only specialized games, or those built on engines with handy defaults in place, will see any benefit.
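To put numbers on the foveated case, the sketch below assigns a coarse shading rate to each screen tile based on its distance from the gaze point and counts how many pixel-shader invocations remain. The 16×16-pixel tile size, the 1×1/2×2/4×4 rates, and the two gaze radii are assumptions chosen for illustration, not values from any driver or API.

```python
import math
from typing import Tuple

TILE = 16  # assumed tile size, in pixels, for the shading-rate map


def rate_for_tile(tx: int, ty: int, gaze: Tuple[float, float],
                  inner: float, outer: float) -> Tuple[int, int]:
    """Pick pixels-per-shade (width, height) from the tile center's
    distance to the gaze point, in pixels (hypothetical thresholds)."""
    cx, cy = (tx + 0.5) * TILE, (ty + 0.5) * TILE
    dist = math.hypot(cx - gaze[0], cy - gaze[1])
    if dist <= inner:
        return (1, 1)  # full rate where the eye is looking
    if dist <= outer:
        return (2, 2)  # one shade per 2x2 block
    return (4, 4)      # one shade per 4x4 block in the periphery


def shading_invocations(width: int, height: int, gaze: Tuple[float, float],
                        inner: float, outer: float) -> int:
    """Total shader invocations for one frame; assumes width and height
    are multiples of TILE."""
    total = 0
    for ty in range(height // TILE):
        for tx in range(width // TILE):
            rw, rh = rate_for_tile(tx, ty, gaze, inner, outer)
            total += (TILE // rw) * (TILE // rh)
    return total


if __name__ == "__main__":
    w, h = 1440, 1600  # a per-eye resolution picked for illustration
    used = shading_invocations(w, h, (w / 2, h / 2), inner=300, outer=600)
    print(f"{used / (w * h):.0%} of full-rate shading work")
```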

New Hardware

GDDR6 is, if we’re going to be perfectly honest, more of a side note than a headline, despite the RTX cards being the first to use it. In a nutshell, it’s GDDR5X but slightly faster, and it uses slightly less power. It’s nothing particularly groundbreaking. It won’t have the bandwidth of HBM2, but it would seem NVIDIA doesn’t need that kind of bandwidth with this architecture, and it can save quite a lot of money as a result, since HBM2 is still very expensive.

One interesting change is the doubling of the L2 cache, from 3MB on GP102 to 6MB on TU102. This helps build a more unified processing platform, allowing each subsection to access the same data sets, which was probably required for the asynchronous compute and new shaders.

The switch from SLI to NVLink is still somewhat surprising, but this might be a case of not having to reinvent the wheel. SLI and NVLink serve the same basic purpose: a direct connection between two cards that lets them communicate much faster than they could over the PCIe bus (even if the interface itself is similar to PCIe). However, NVLink is much faster, with up to 100GB/s of bidirectional bandwidth on TU102 (50GB/s on TU104), and it can do something SLI never could: resource pooling.
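For a sense of scale, the arithmetic below compares those NVLink figures with a PCIe 3.0 x16 slot, which signals at 8 GT/s per lane with 128b/130b encoding. Only the NVLink numbers come from the paragraph above; protocol overheads beyond line encoding are ignored.

```python
# Bidirectional NVLink figures quoted above, in GB/s.
NVLINK_BIDIR_GBS = {"TU102": 100.0, "TU104": 50.0}


def pcie3_gbytes_per_s(lanes: int = 16) -> float:
    """Per-direction PCIe 3.0 bandwidth in GB/s: 8 GT/s per lane, scaled by
    128b/130b encoding efficiency, divided by 8 to turn bits into bytes."""
    return 8.0 * (128 / 130) * lanes / 8


def nvlink_vs_pcie(chip: str) -> float:
    """How many times faster the NVLink connection is than PCIe 3.0 x16,
    comparing bidirectional figures on both sides."""
    return NVLINK_BIDIR_GBS[chip] / (2 * pcie3_gbytes_per_s(16))


if __name__ == "__main__":
    print(f"PCIe 3.0 x16: {pcie3_gbytes_per_s():.2f} GB/s per direction")
    for chip in NVLINK_BIDIR_GBS:
        print(f"{chip} NVLink: {nvlink_vs_pcie(chip):.1f}x PCIe 3.0 x16")
```

That is about 15.75 GB/s each way for PCIe, so NVLink on TU102 offers roughly three times the bandwidth of the slot the card sits in.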

One of the main issues with SLI was that resources were not truly combined. The full compute of both cards was available, but the memory was cloned: with two 8GB framebuffers you didn’t have 16GB to play with, as every asset had to be duplicated on both cards and could not be shared. NVLink, however, actually allows for shared memory (which is why the bandwidth of the link is so high).

Games may still render faster with cloned resources, avoiding the extra hop over the interface, but compute workloads, and ray tracing specifically, will benefit greatly from shared resources. That is likely why SLI was replaced with NVLink: so that ray tracing can properly leverage the full extent of the hardware. For normal games, though, we’re unlikely to see this take off, much like SLI before it, as it still requires game developers to allow for SLI profiles.

VirtualLink has been brought up a few times, and you can read more about it in a news post we did previously. It’s not something that will see immediate use, but it lays the groundwork for next-generation Head Mounted Displays (HMDs), plus an emerging market for mobile monitors.

Turing also brings tweaks to video decode and encode. DisplayPort will support 8K/60 natively over a single cable, rather than requiring two, and this will even work over VirtualLink. HDR is supported natively too, along with tone mapping down to an 8-bit display (fake HDR on a standard display). The NVENC encoder has been overhauled and can handle real-time encoding of 8K video at 30 FPS, and the decoder now handles 12-bit HEVC HDR. In general, this means better support for upcoming formats, better HEVC performance, and higher quality encoding for game streaming to Twitch and YouTube at higher resolutions (when available).
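Tone mapping squeezes a high dynamic range into the 0-255 code values an 8-bit display accepts. NVIDIA hasn't said which operator its hardware uses; the sketch below uses the textbook Reinhard curve, L / (1 + L), followed by a simple 2.2 power-law gamma, purely to illustrate the idea.

```python
def reinhard_to_8bit(luminance: float, gamma: float = 2.2) -> int:
    """Map a relative HDR luminance (1.0 = reference white) to an 8-bit
    code value: Reinhard compresses [0, inf) into [0, 1), then a power-law
    gamma encodes the result for display."""
    if luminance < 0:
        raise ValueError("luminance must be non-negative")
    compressed = luminance / (1.0 + luminance)
    encoded = compressed ** (1.0 / gamma)
    return round(encoded * 255)


if __name__ == "__main__":
    # Values far brighter than reference white still fit under 255.
    for lum in (0.1, 1.0, 10.0, 1000.0):
        print(lum, "->", reinhard_to_8bit(lum))
```

Highlights are compressed rather than clipped, which is what lets an HDR source look reasonable, if not truly HDR, on a standard panel.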

TU102, TU104, And TU106

Now for the cards and the specifics of their GPUs. The breakdown of each GPU is fairly complicated, as there is more to it than clock speeds and how many SM units each has; it also extends to individual features. A good example is that the TU106 GPU, which will be at the heart of the RTX 2070, won’t support NVLink.

If you look at the chart below, you can see how the GeForce Turing GPUs are segmented from their Quadro counterparts, and how they compare against the older Pascal cards.

| Model | CUDA Cores | Boost Clock | RT | Memory | Memory Bus | Memory Bandwidth | GPU | SRP |
|---|---|---|---|---|---|---|---|---|
| Quadro RTX 6000 | 4608 | 1730 MHz* | 10 GR/s | 24GB @ 14Gbps | 384-bit GDDR6 | 672 GB/s | TU102 | $$$$$ |
| GeForce RTX 2080 Ti | 4352 | 1545 MHz (1635 MHz FE) | 10 GR/s | 11GB @ 14Gbps | 352-bit GDDR6 | 616 GB/s | TU102 | $999 |
| GeForce GTX 1080 Ti | 3584 | 1582 MHz | 1.21 GR/s | 11GB @ 11Gbps | 352-bit GDDR5X | 484 GB/s | GP102 | $699 |
| Quadro RTX 5000 | 3072 | 1630 MHz* | 6 GR/s | 16GB @ 14Gbps | 256-bit GDDR6 | 448 GB/s | TU104 | $$$$ |
| GeForce RTX 2080 | 2944 | 1710 MHz (1800 MHz FE) | 8 GR/s | 8GB @ 14Gbps | 256-bit GDDR6 | 448 GB/s | TU104 | $699 |
| GeForce GTX 1080 | 2560 | 1733 MHz | <1 GR/s | 8GB @ 10Gbps | 256-bit GDDR5X | 320 GB/s | GP104 | $599 |
| GeForce RTX 2070 | 2304 | 1620 MHz (1710 MHz FE) | 6 GR/s | 8GB @ 14Gbps | 256-bit GDDR6 | 448 GB/s | TU106 | $499 |
| GeForce GTX 1070 | 1920 | 1683 MHz | <1 GR/s | 8GB @ 8Gbps | 256-bit GDDR5 | 256 GB/s | GP104 | $379 |

RT = estimated ray tracing throughput, in gigarays per second (GR/s).
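Peak memory bandwidth follows directly from bus width and per-pin data rate, which makes it a handy sanity check on spec tables like this one: every pin moves the data rate in gigabits per second, and dividing by 8 converts to gigabytes. The per-pin rates below are the cards' launch memory speeds.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (pins) times per-pin data
    rate (Gb/s), divided by 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


# (bus width in bits, per-pin data rate in Gbps)
CARDS = {
    "Quadro RTX 6000": (384, 14.0),
    "GeForce RTX 2080 Ti": (352, 14.0),
    "GeForce GTX 1080 Ti": (352, 11.0),
    "GeForce RTX 2080": (256, 14.0),
    "GeForce GTX 1080": (256, 10.0),
    "GeForce GTX 1070": (256, 8.0),
}

if __name__ == "__main__":
    for name, (bus, rate) in CARDS.items():
        print(f"{name}: {bandwidth_gbs(bus, rate):.0f} GB/s")
```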

What will catch a number of people out is that the Quadros are fully unlocked cards with all SM units enabled, while the GeForce cards are not. This is the same situation as the Pascal launch, which later upset a lot of users when the TITAN X (Pascal) was superseded by the GTX 1080 Ti, only for the TITAN Xp to arrive later still. Since none of the GeForce RTX cards listed here has its GPU fully unlocked, we’re likely to see a similar situation.

For example, an RTX 2070 Ti may come out later with a partial TU104 GPU instead of TU106, and a TITAN T (or X-3, or Xt, or XXX, or another TITAN) may come out with a full TU102 GPU. This leaves the RTX 2080 in a bit of an odd position: it uses a cut-back TU104, whereas the GTX 1080 used a full GP104, while the RTX 2080 Ti uses a cut-back TU102. Does this mean we’ll see an RTX 2080 v2 later?

What most will be wondering, though, is how like-for-like cards compare from Pascal to Turing, e.g. GTX 1080 vs. RTX 2080. For that, you’ll have to wait for the reviews, but as an educated guess, the Turing cards will be faster, simply because they have more SM units, more CUDA cores, asynchronous compute, and faster memory; it’s pretty much a given at this point. How much faster, and whether it’s worth the price difference, remains to be seen. What will be interesting, though, is the gap between an RTX 2070 and a GTX 1080.

The performance impact of enabling ray tracing in games will also be hard to judge, since Shadow of the Tomb Raider is currently the only game with RTX enabled, and the others (Battlefield V and Metro Exodus) won’t come out for months, so each will require its own testing later.

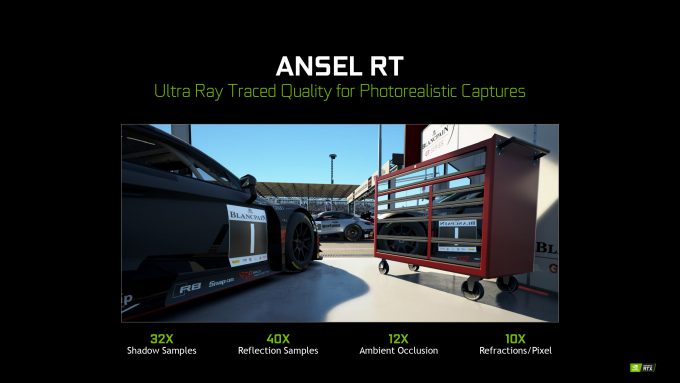

The Turing launch also brings updates to GeForce Experience, including a new version of Ansel that lets you perform extended ray tracing with the screen capture camera utility, as well as upscaling of the captured image. The updated video codecs will also improve the quality of streams to Twitch and YouTube.

We can’t really provide any final thoughts, simply because there is still a lot of speculation and many unknowns at this point. What we can say is that we are quite excited about the path to ray tracing, and to see whether the industry actually picks up on it. However, when we look at the state of DX12 and Vulkan adoption, we do have to temper our enthusiasm.
